A comprehensive guide to implementing ensemble methods for financial fraud detection can be found in my GitHub repository:

The Impact of Fraud on Global Economies

Fraud costs economies billions of dollars every year, and recent surveys suggest it is becoming more common. In 2018, almost half of the companies surveyed by PwC reported having experienced some type of fraud, up sharply from the 36% reported two years earlier in 2016.

To fight fraud effectively, we need to use the best data analysis methods available. Traditional techniques have worked in the past, but now we are looking at different strategies. The goal is to understand the strengths and weaknesses of various learning models. This will help us identify the model that gives the most accurate predictions.

Methods for Spotting Fraud

Detecting fraud requires careful analysis across fields like finance and economics. Some common statistical data analysis methods include:

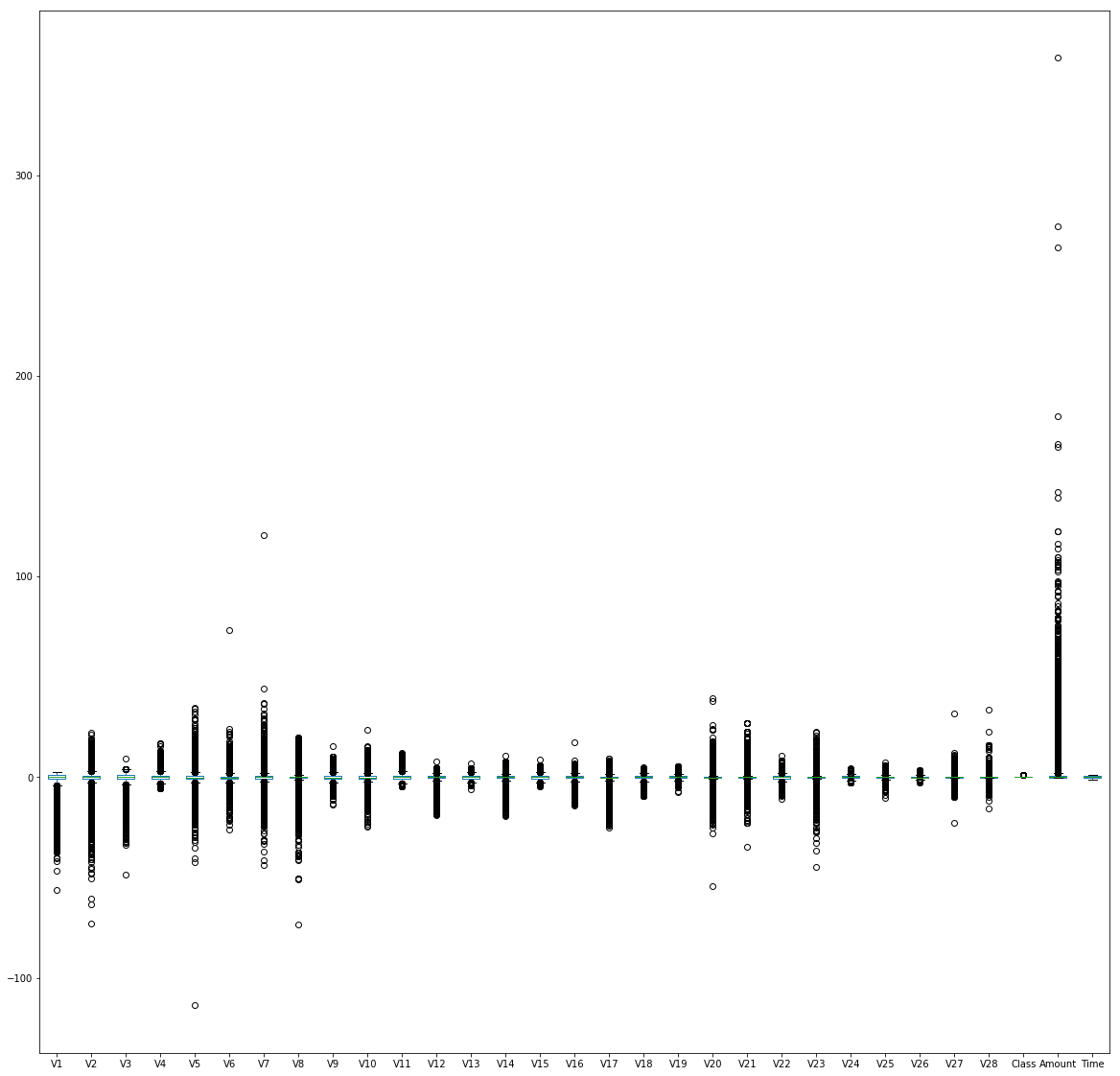

- Pre-processing techniques to find, validate, and fix errors or missing data

- Calculating key statistics like averages, quantiles, performance metrics, and probability distributions

- Analyzing time-dependent data (time series)
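As a rough illustration of the three kinds of analysis listed above, here is a minimal sketch using only Python's standard library. The transaction amounts, the choice of median imputation, and the window size are all assumptions made for the example, not values from this project.

```python
import statistics

# Hypothetical transaction amounts; None marks a missing value.
amounts = [12.5, 80.0, None, 43.2, 5.0, None, 1500.0, 27.9]

# Pre-processing: find missing entries and impute them with the median
# of the observed values (one simple choice among many).
observed = [a for a in amounts if a is not None]
median_amount = statistics.median(observed)
cleaned = [median_amount if a is None else a for a in amounts]

# Key statistics: mean and quartile cut points of the cleaned series.
mean_amount = statistics.mean(cleaned)
quartiles = statistics.quantiles(cleaned, n=4)  # three cut points: Q1, Q2, Q3


def moving_average(values, window):
    """Trailing moving average, one output per full window."""
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


# Time series: smooth the sequence with a window of 3 observations.
smoothed = moving_average(cleaned, window=3)

print(mean_amount, quartiles, smoothed)
```

The median is used for imputation here because a single very large amount would pull the mean far from a typical transaction.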

In this case, we use supervised learning models only; unsupervised approaches such as clustering are out of scope. Supervised models learn from labeled examples, so they can only identify frauds that are similar to ones that humans have already flagged and classified.

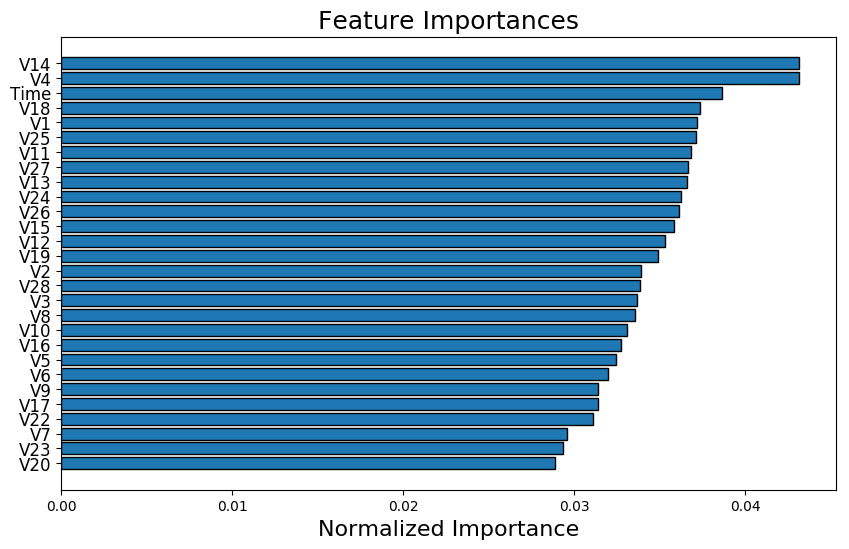

For credit card fraud detection, classification is tricky. We need to build models that can accurately label each transaction as legitimate or fraudulent. The models make this decision from transaction details such as the amount, merchant, location, and time.

Ensemble Methods: Benefits and Challenges

We have found ensemble methods to be a promising approach for this problem. Ensembles built from decision trees are appealing because combining many trees reduces the overfitting to which a single tree is prone.
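To make the idea of a tree-based ensemble concrete, here is a minimal sketch of bagging: each one-split tree (a "decision stump") is trained on a bootstrap sample, and the ensemble predicts by majority vote. This is an illustration only, not the model used in this project; the function names, the one-dimensional toy data, and the number of estimators are assumptions.

```python
import random


def fit_stump(xs, ys):
    """Fit a one-feature decision stump that predicts 1 when x > threshold."""
    best_threshold, best_errors = None, len(ys) + 1
    for threshold in sorted(set(xs)):
        errors = sum((x > threshold) != bool(y) for x, y in zip(xs, ys))
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


def fit_bagged_stumps(xs, ys, n_estimators, rng):
    """Train each stump on a bootstrap sample drawn with replacement."""
    n = len(xs)
    thresholds = []
    for _ in range(n_estimators):
        sample = [rng.randrange(n) for _ in range(n)]
        thresholds.append(
            fit_stump([xs[i] for i in sample], [ys[i] for i in sample])
        )
    return thresholds


def predict_majority(thresholds, x):
    """Return 1 if more than half of the stumps vote for class 1."""
    votes = sum(x > t for t in thresholds)
    return 1 if 2 * votes > len(thresholds) else 0


# Hypothetical toy data: larger amounts are fraudulent, plus one noisy label.
xs = [float(i) for i in range(40)]
ys = [1 if x >= 30 else 0 for x in xs]
ys[5] = 1  # a mislabeled small transaction

ensemble = fit_bagged_stumps(xs, ys, n_estimators=25, rng=random.Random(0))
print(predict_majority(ensemble, 39.0), predict_majority(ensemble, 0.0))
```

Because each stump sees a different resampling of the data, a single noisy label influences individual stumps differently, and the vote averages those differences out.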

However, improper sampling can skew the apparent quality of a model. The main goal of model validation is to assess how well the model will work on new data. If we decide to use a model based on how it performs on a validation set, we must oversample correctly.

By only oversampling the training data, we avoid using any information from the validation data to generate synthetic observations. This helps ensure the results will apply to new data.
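The ordering matters more than the specific oversampler, so here is a minimal sketch of it: split first, then oversample the training part of each fold only. To stay dependency-free, the sketch swaps in plain random duplication of minority rows rather than generating synthetic observations; the helper names and toy data are assumptions made for illustration.

```python
import random


def random_oversample(rows, labels, rng):
    """Duplicate minority-class rows at random until both classes are balanced."""
    minority = [i for i, y in enumerate(labels) if y == 1]
    majority = [i for i, y in enumerate(labels) if y == 0]
    if not minority or len(minority) >= len(majority):
        return list(rows), list(labels)
    extra = [rng.choice(minority) for _ in range(len(majority) - len(minority))]
    idx = majority + minority + extra
    return [rows[i] for i in idx], [labels[i] for i in idx]


def oversampled_folds(rows, labels, k, rng):
    """Yield (X_train, y_train, X_val, y_val), oversampling the training part only."""
    indices = list(range(len(rows)))
    rng.shuffle(indices)
    folds = [indices[f::k] for f in range(k)]
    for val_idx in folds:
        held_out = set(val_idx)
        train_idx = [i for i in indices if i not in held_out]
        # The validation rows are never seen by the oversampler.
        X_train, y_train = random_oversample(
            [rows[i] for i in train_idx], [labels[i] for i in train_idx], rng
        )
        yield X_train, y_train, [rows[i] for i in val_idx], [labels[i] for i in val_idx]


# Hypothetical toy data: 100 transactions, 10 of them fraudulent (label 1).
rows = [[float(i)] for i in range(100)]
labels = [1 if i % 10 == 0 else 0 for i in range(100)]

for X_train, y_train, X_val, y_val in oversampled_folds(
    rows, labels, k=5, rng=random.Random(0)
):
    # A real pipeline would fit on (X_train, y_train) and score on (X_val, y_val).
    print(len(X_train), sum(y_train), len(X_val), sum(y_val))
```

Oversampling before the split would instead let copies of the same fraudulent row land on both sides, which inflates validation scores. In practice the folds would usually also be stratified so that each one contains some fraud cases.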

Oversampling can potentially improve models trained on unbalanced data, but it’s important to remember that incorrect oversampling can create a misleading picture of a model’s ability to generalize.

Our results may appear modest compared to some published online, but it’s essential to use proper cross-validation with an appropriate sampling method and metric when working with imbalanced data. This gives a more realistic assessment of the model’s performance.
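The choice of metric can be illustrated with a small sketch. On heavily imbalanced data, a model that never flags fraud still scores very high accuracy, while precision, recall, and F1 expose the problem. The class counts and predictions below are hypothetical and are not results from this project.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn), treating label 1 (fraud) as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp, fp, fn, tn


def accuracy(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return (tp + tn) / (tp + fp + fn + tn)


def precision_recall_f1(y_true, y_pred):
    tp, fp, fn, _ = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical data: 1000 transactions, 10 of them fraudulent.
y_true = [1] * 10 + [0] * 990

# A useless model that labels every transaction as legitimate.
always_legit = [0] * 1000

# A model that catches 6 of the 10 frauds and raises 4 false alarms.
some_model = [1] * 6 + [0] * 4 + [1] * 4 + [0] * 986

for name, y_pred in [("always_legit", always_legit), ("some_model", some_model)]:
    print(name, accuracy(y_true, y_pred), precision_recall_f1(y_true, y_pred))
```

Both models reach roughly 99% accuracy, yet only the second one detects any fraud at all, which is why accuracy alone is a poor basis for model selection here.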