Language models (LMs) form the core of modern AI, powering applications from chatbots to content generation. While highly useful, these models can sometimes generate undesirable content that is misleading, harmful, or irrelevant. Reinforcement Learning from Human Feedback (RLHF), which uses human evaluations of LM outputs to guide learning, offers a potential solution.

The Fine-Grained RLHF project extends RLHF by using more detailed human feedback: feedback that indicates which part of an output is problematic and what kind of problem it is.

A Fresh Take on Language Model Training

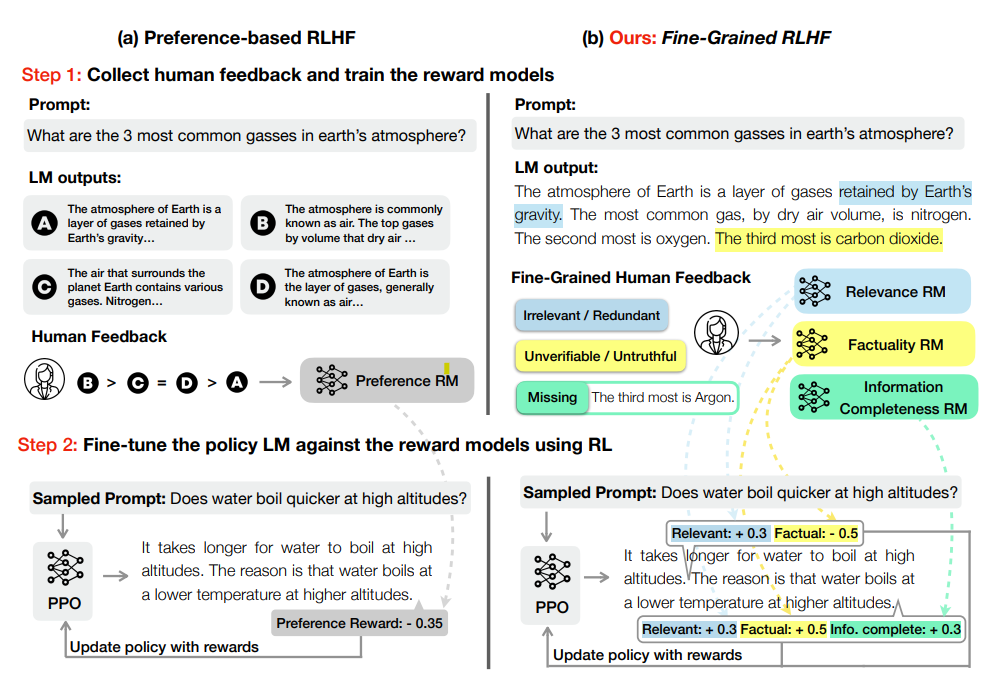

The Fine-Grained RLHF paper offers a new perspective on language model training. It argues that the holistic feedback typically used in RLHF, a single preference judgment over an entire output, conveys little information when the output is long. A single judgment does not show which parts of the output drove the preference or where the mistakes were made.

The solution proposed is to use fine-grained human feedback that provides a clearer training signal to improve model performance. The Fine-Grained RLHF framework innovates in two main ways:

- Density: It provides a reward after each generated segment (e.g., a sentence).

- Feedback Types: It uses multiple reward models tied to different feedback types, such as factual errors, irrelevance, and missing information.

This allows the model to learn from more specific feedback, potentially leading to better performance.
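To make the two ideas above concrete, here is a minimal sketch of how per-segment rewards from several reward models might be combined. The function `combine_segment_rewards`, the toy scorers, and the weights are all hypothetical and chosen only for illustration; in practice each reward model would be a trained model, and the paper's exact combination scheme may differ.

```python
from typing import Callable, Dict, List

# A reward model is assumed to map one text segment to a scalar score.
RewardModel = Callable[[str], float]


def combine_segment_rewards(
    segments: List[str],
    reward_models: Dict[str, RewardModel],
    weights: Dict[str, float],
) -> List[float]:
    """Return one weighted reward per segment (density), summed over
    several feedback types (one reward model per type)."""
    return [
        sum(weights[name] * model(segment) for name, model in reward_models.items())
        for segment in segments
    ]


# Toy stand-ins for trained reward models (illustration only).
def toy_relevance(segment: str) -> float:
    return 1.0 if "paris" in segment.lower() else -1.0


def toy_factuality(segment: str) -> float:
    return -1.0 if "moon" in segment.lower() else 1.0


segments = ["Paris is the capital of France.", "It is located on the moon."]
rewards = combine_segment_rewards(
    segments,
    {"relevance": toy_relevance, "factuality": toy_factuality},
    {"relevance": 0.5, "factuality": 1.0},
)
print(rewards)
```

The second sentence receives a negative reward on its own, so the training signal points at the specific segment that caused the problem rather than at the whole response.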

Insights from Twitter

A tweet thread by Surge AI provides more insights into the benefits of this approach. It highlights how standard RLHF LMs rely on broad human preference judgments that give limited information for long text outputs.

In contrast, the Fine-Grained RLHF framework aims to identify exactly which parts of an output affected user preference, using detailed human feedback as a clear training signal. The framework allows learning from reward functions that are fine-grained in two ways:

- Providing a reward for each generated segment

- Using multiple reward models for different feedback types

In the paper's toxicity experiments, the Perspective API’s toxicity scores are used to assign a reward to each generated sentence. Fine-grained RLHF with sentence-level rewards achieves the lowest toxicity and the lowest perplexity of the methods compared.
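The sentence-level reward can be sketched as follows. This assumes one plausible formulation: the reward for a sentence is the drop in toxicity score of the text so far once that sentence is appended. The scorer `toy_toxicity_score` is a hypothetical stand-in, not the Perspective API, and no real API call is made.

```python
from typing import Callable, List


def sentence_level_rewards(
    sentences: List[str],
    toxicity_score: Callable[[str], float],
) -> List[float]:
    """Reward each sentence by how much it changes the toxicity of the
    text generated so far (positive = less toxic, negative = more toxic)."""
    rewards = []
    previous = 0.0  # assumption: an empty prefix has toxicity 0
    for end in range(1, len(sentences) + 1):
        prefix = " ".join(sentences[:end])
        current = toxicity_score(prefix)
        rewards.append(previous - current)
        previous = current
    return rewards


def toy_toxicity_score(text: str) -> float:
    """Hypothetical scorer: fraction of words found in a tiny blocklist."""
    words = text.lower().split()
    if not words:
        return 0.0
    flagged = sum(1 for w in words if w.strip(".,!?") in {"stupid", "idiot"})
    return flagged / len(words)


sentences = ["You are kind.", "You are stupid."]
rewards = sentence_level_rewards(sentences, toy_toxicity_score)
print(rewards)
```

Under this formulation the per-sentence rewards sum to the negative toxicity of the full output, so the total signal matches a holistic toxicity penalty while attributing it to individual sentences.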

Key Takeaways

- The paper presents a new framework for training language models using detailed human feedback. This provides a clearer training signal that can improve performance.

- It demonstrates that detailed human feedback can be more sample efficient than broad rewards. The model can achieve lower toxicity with fewer training steps while maintaining better fluency.

- It offers a practical implementation of the fine-grained RLHF approach. Experiments on the REALTOXICITYPROMPTS dataset show its effectiveness.

Questions Raised

The paper raises several interesting questions:

- Can detailed human feedback improve language model training?

- Can such feedback provide a clearer training signal that enhances performance?

- How does detailed human feedback compare to broad rewards in terms of sample efficiency?

Innovations

- A new way of training and learning from reward functions that are fine-grained in density (providing a reward after each generated segment) and feedback types (using multiple reward models for different feedback types).

- Use of the Perspective API’s toxicity scores to assign rewards to sentences, demonstrating a practical application of fine-grained human feedback.

- A detailed analysis of the fine-grained RLHF approach, including a sample efficiency analysis showing how learning from denser fine-grained reward can be more efficient than holistic reward.
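The difference between holistic and dense rewards is easiest to see at the token level. The sketch below assumes each reward is placed on the last token of the span it scores, with zeros elsewhere; the function names and numbers are hypothetical and only illustrate the layout a reinforcement learning trainer might receive.

```python
from typing import List


def holistic_token_rewards(segment_lengths: List[int], sequence_reward: float) -> List[float]:
    """One reward for the whole output, placed on the final token."""
    rewards = [0.0] * sum(segment_lengths)
    rewards[-1] = sequence_reward
    return rewards


def dense_token_rewards(segment_lengths: List[int], segment_rewards: List[float]) -> List[float]:
    """One reward per segment, placed on the last token of each segment."""
    rewards: List[float] = []
    for length, reward in zip(segment_lengths, segment_rewards):
        rewards.extend([0.0] * (length - 1) + [reward])
    return rewards


# Two segments of 3 and 2 tokens (lengths are assumed to be positive).
lengths = [3, 2]
holistic = holistic_token_rewards(lengths, -1.0)
dense = dense_token_rewards(lengths, [0.5, -1.5])
print(holistic)
print(dense)
```

With the holistic layout the learner sees only that the output as a whole scored poorly. With the dense layout it also sees that the first segment was fine and the second was not, which is one intuition for why fewer training steps may be needed.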

Conclusion

The paper presents an approach to training language models that replaces a single holistic reward with detailed, localized human feedback. This gives a clearer training signal, which can improve both the model's performance and its learning efficiency.

The paper's experiments highlight the promise of this approach, showing lower toxicity and better fluency in language model outputs than the methods it was compared against.

As language models continue to advance and grow more powerful, methods like Fine-Grained RLHF will be crucial for ensuring they are trained and controlled effectively. It will be exciting to see how this approach evolves and shapes the future of language model training.