Large Language Models (LLMs) have made significant progress in understanding and processing natural language, but using tools through API calls remains a challenge for them. Researchers from UC Berkeley and Microsoft Research have developed Gorilla, a finetuned LLaMA-based model that outperforms GPT-4 at writing API calls.

What is Gorilla?

Gorilla is an LLM trained on three large machine learning hub datasets:

- Torch Hub

- TensorFlow Hub

- HuggingFace

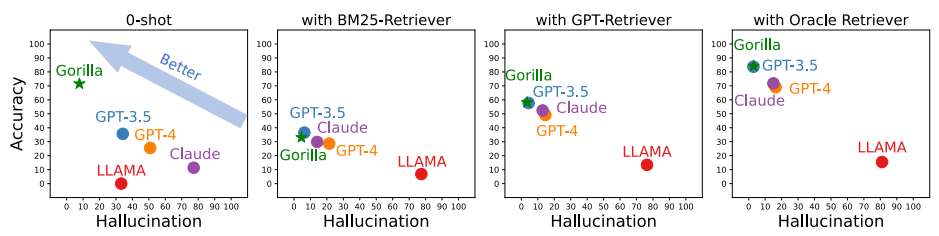

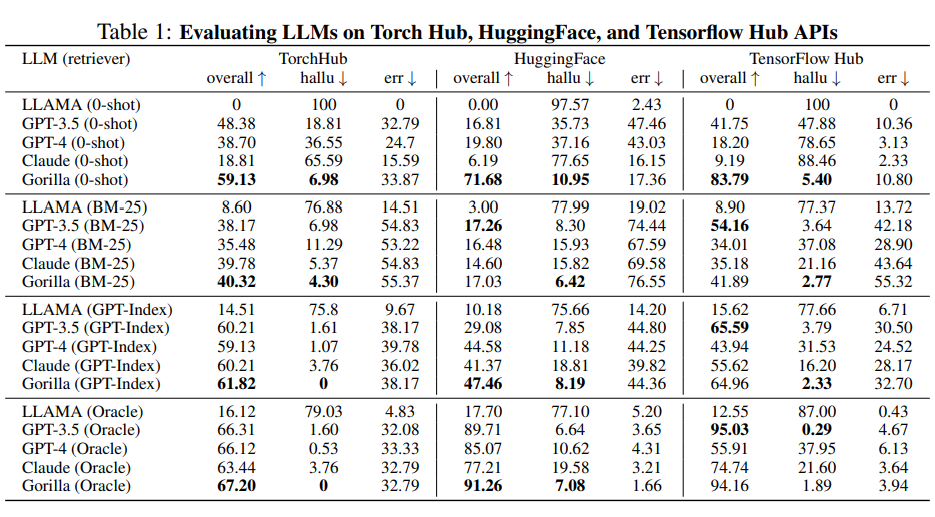

LLMs often struggle to generate accurate input arguments and to use API calls correctly. In a zero-shot setting, Gorilla outperforms GPT-4, ChatGPT, and Claude at this task, producing fewer hallucination errors and more reliable output.

APIBench Dataset

To evaluate Gorilla, researchers created the APIBench dataset, which includes:

- All API calls from Torch Hub and TensorFlow Hub

- Top 20 models from HuggingFace for each task category

- Ten synthetic user query prompts for each API, generated with the self-instruct method
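The construction described above can be sketched roughly as follows. This is a minimal illustration, not the researchers' pipeline: the record fields (`category`, `downloads`, `api_call`), ranking by download count, and the `template_prompts` placeholder (which stands in for an LLM-driven self-instruct step) are all assumptions made for the example.

```python
from collections import defaultdict


def top_k_per_category(models, k=20):
    """Keep the k highest-ranked models in each task category.

    Ranking by a 'downloads' field is an assumption of this sketch.
    """
    by_category = defaultdict(list)
    for model in models:
        by_category[model["category"]].append(model)
    selected = []
    for category in sorted(by_category):
        ranked = sorted(
            by_category[category], key=lambda m: m["downloads"], reverse=True
        )
        selected.extend(ranked[:k])
    return selected


def template_prompts(api, n):
    """Hypothetical placeholder for self-instruct generation.

    A real pipeline would ask an LLM to write n varied user requests.
    """
    return [
        f"Request {i + 1}: I need a model for {api['category']}."
        for i in range(n)
    ]


def build_records(apis, make_prompts=template_prompts, prompts_per_api=10):
    """Pair every generated prompt with the API call it should map to."""
    records = []
    for api in apis:
        for prompt in make_prompts(api, prompts_per_api):
            records.append({"instruction": prompt, "api_call": api["api_call"]})
    return records
```

Each API contributes ten instruction/API pairs, so the dataset size grows linearly with the number of APIs selected.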

Gorilla’s Capabilities

When paired with a document retriever, Gorilla can:

- Adapt to test-time document changes

- Enable flexible user updates or version changes

- Substantially reduce hallucination issues
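To make the retriever pairing concrete, here is a minimal sketch of how retrieved documentation might be attached to a user query at test time. The word-overlap retriever, the document fields, and the exact prompt wording are assumptions for illustration; a real setup would likely use a stronger retriever such as BM25 or an embedding index.

```python
import json


def tokenize(text):
    """Lowercase whitespace tokenization; deliberately simplistic."""
    return set(text.lower().split())


def retrieve(query, docs):
    """Return the document whose description shares the most words with the query."""
    query_words = tokenize(query)
    return max(
        docs, key=lambda doc: len(query_words & tokenize(doc["description"]))
    )


def build_prompt(query, docs):
    """Append the retrieved API documentation to the user query.

    The instruction wording below is an assumption of this sketch.
    """
    doc = retrieve(query, docs)
    return f"{query}\nUse this API documentation for reference: {json.dumps(doc)}"
```

Because the documentation is looked up when the prompt is built, replacing an entry in `docs` with a newer version changes what the model sees without any retraining.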

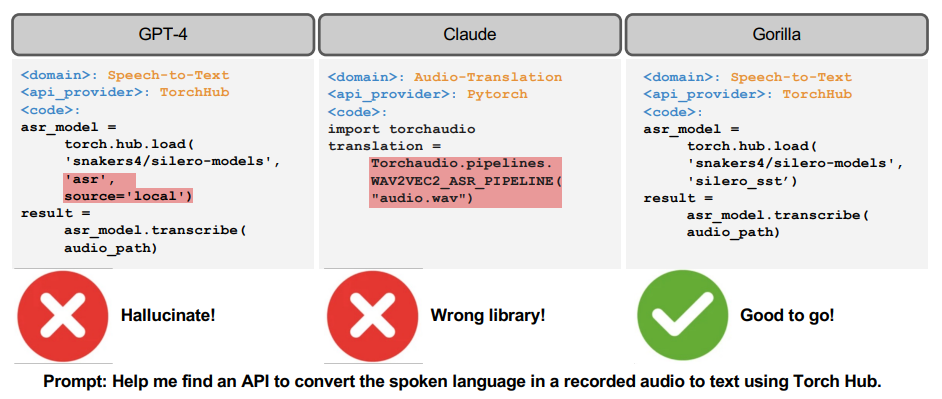

In tests, Gorilla correctly identified the task and returned a fully qualified API call. In contrast, GPT-4 generated API calls for models that do not exist, and Claude selected the wrong library.
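The difference between these failure modes can be illustrated with a small checker. This is a sketch under stated assumptions, not the researchers' evaluation code: it parses a generated call with Python's `ast` module, compares only the function name and positional string arguments, and treats a hypothetical `catalog` of known calls as the source of truth.

```python
import ast


def call_target(source):
    """Return (function name, positional string arguments) for one call expression."""
    node = ast.parse(source, mode="eval").body
    if not isinstance(node, ast.Call):
        raise ValueError("expected a single call expression")
    func_name = ast.unparse(node.func)  # requires Python 3.9+
    string_args = tuple(
        arg.value
        for arg in node.args
        if isinstance(arg, ast.Constant) and isinstance(arg.value, str)
    )
    return func_name, string_args


def classify(generated, reference, catalog):
    """Label a generated call as 'correct', 'wrong_api', or 'hallucination'.

    'wrong_api' means the call exists in the catalog but is not the expected one;
    'hallucination' means it matches nothing in the catalog or does not parse.
    """
    try:
        target = call_target(generated)
    except (SyntaxError, ValueError):
        return "hallucination"
    if target == call_target(reference):
        return "correct"
    if target in catalog:
        return "wrong_api"
    return "hallucination"
```

Keyword arguments are ignored here for brevity, so two calls that differ only in keywords are treated as the same API.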

Key Contributions of Gorilla

- APIBench Dataset: A comprehensive dataset of APIs from HuggingFace, Torch Hub, and TensorFlow Hub for further research and development.

- Document Retriever Integration: Enables adaptation to test-time document changes and flexible updates.

- Reduced Hallucination Errors: Improves the reliability of the model’s outputs.

Questions Addressed by Gorilla

- How can LLMs effectively use tools via API calls?

- How can LLMs adapt to test-time document changes?

- How can the issue of hallucination be mitigated?

Innovations of Gorilla

- Finetuned LLaMA-based Model: Surpasses GPT-4 in writing API calls.

- Improved API Call Generation: Enhances applicability and reliability of results.

- Keeping Up with Updated Documentation: Provides users with more accurate and current information.

Conclusion

Gorilla represents a significant advancement in LLMs, addressing the challenge of writing API calls. By reducing hallucination problems and improving reliability, Gorilla demonstrates the potential for LLMs to use tools more accurately and keep up with frequently updated documentation.

For more information, check out the paper, GitHub repository, and project page.