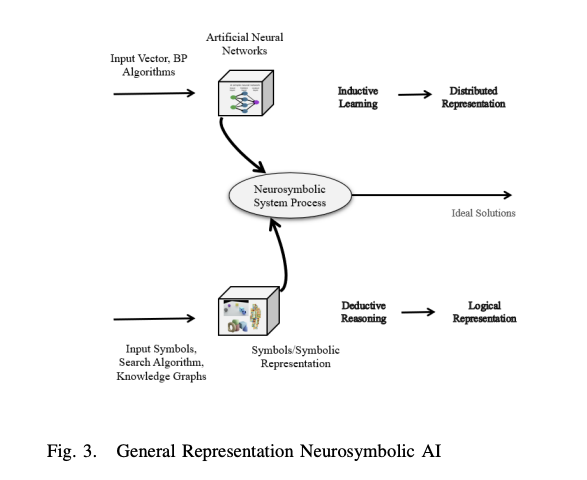

Neurosymbolic reinforcement learning and planning is an exciting field that combines two distinct traditions: the structured, rule-based world of symbolic reasoning and the adaptive, data-driven world of neural networks. The combination aims to let AI systems learn from real-world data while retaining the logical clarity of symbolic reasoning.

Combining Symbols and Neurons

Symbolic reasoning operates on well-defined rules and structured relationships, which allows complex concepts to be represented and manipulated precisely.

On the other hand, neural networks excel at learning patterns from large amounts of unstructured data and applying that knowledge to new situations.

The neurosymbolic approach aims to combine the strengths of both symbolic reasoning and neural networks. But how does this integration work in reinforcement learning and planning?

Neurosymbolic Integration in Reinforcement Learning and Planning

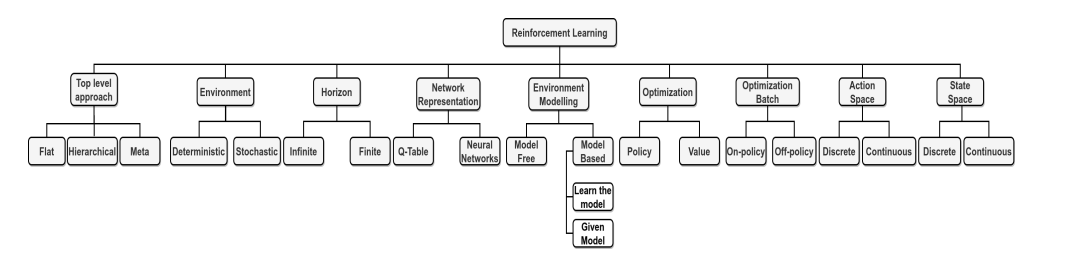

In reinforcement learning (RL), an agent learns by interacting with its environment: it takes actions, observes the resulting states, and receives rewards that signal progress toward a goal. This feedback tells the agent about the consequences of its actions.
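The interaction loop described above can be sketched with tabular Q-learning, a classic RL algorithm. This is a minimal illustration under stated assumptions: the five-cell corridor environment, the reward of 1 at the goal, and the hyperparameters are all hypothetical, and a lookup table stands in for the neural network a neurosymbolic system would normally use.

```python
import random

# Hypothetical toy environment: a corridor of cells 0..N_STATES-1.
# Reaching the last cell ends the episode with reward 1; every other step gives 0.
N_STATES = 5
ACTIONS = (-1, +1)  # move left or move right


def step(state, action):
    """Environment feedback: return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def greedy(q, state, rng):
    """Pick the highest-valued action, breaking ties at random."""
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit current estimates, occasionally explore.
            action = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(q, state, rng)
            next_state, reward, done = step(state, action)
            # Temporal-difference update: move Q(s, a) toward reward + discounted future value.
            future = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = next_state
    return q


q = q_learning()
# Greedy policy for every non-terminal cell.
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)
```

After training, the greedy policy should step right (+1) from every cell, since that is the shortest route to the only rewarding state.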

Planning, by contrast, uses a model of the environment to determine a sequence of actions before any of them is executed.
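As a rough illustration of planning over a symbolic model, the sketch below represents states as sets of facts and actions as STRIPS-style preconditions, add lists, and delete lists, then runs breadth-first search to find a shortest plan. The key-and-door domain and every fact and action name are hypothetical, chosen only to keep the example small.

```python
from collections import deque
from typing import FrozenSet, List, NamedTuple, Optional


class Action(NamedTuple):
    name: str
    pre: FrozenSet[str]     # facts that must hold before the action
    add: FrozenSet[str]     # facts made true by the action
    delete: FrozenSet[str]  # facts made false by the action


def plan(init: FrozenSet[str], goal: FrozenSet[str], actions: List[Action]) -> Optional[List[str]]:
    """Breadth-first search over the symbolic model; returns a shortest action sequence or None."""
    frontier = deque([(init, [])])
    visited = {init}
    while frontier:
        state, path = frontier.popleft()
        if goal <= state:  # every goal fact holds
            return path
        for act in actions:
            if act.pre <= state:  # action is applicable in this state
                nxt = (state - act.delete) | act.add
                if nxt not in visited:
                    visited.add(nxt)
                    frontier.append((nxt, path + [act.name]))
    return None


# Hypothetical toy domain: fetch a key, unlock a door, walk through it.
DOMAIN = [
    Action("go_to_key", frozenset({"at_start"}), frozenset({"at_key"}), frozenset({"at_start"})),
    Action("pick_key", frozenset({"at_key"}), frozenset({"has_key"}), frozenset()),
    Action("go_to_door", frozenset({"at_key"}), frozenset({"at_door"}), frozenset({"at_key"})),
    Action("unlock_door", frozenset({"at_door", "has_key"}), frozenset({"door_open"}), frozenset()),
    Action("go_through", frozenset({"at_door", "door_open"}), frozenset({"in_room"}), frozenset({"at_door"})),
]

result = plan(frozenset({"at_start"}), frozenset({"in_room"}), DOMAIN)
print(result)
```

Because the whole plan is computed from the model before execution, the agent never tries `go_to_door` without the key: the search discovers that `unlock_door` would then be inapplicable.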

Here’s how neurosymbolic integration works in RL and planning:

Symbolic Components:

- Structured Representation: Symbolic reasoning provides a structured way to represent the environment, goals, and actions. This is important in complex scenarios where the agent must understand the relationships between entities.

- Logic-based Decision Making: Symbolic components enable the agent to reason through the implications of different actions, for example ruling out actions whose preconditions are not met, and to choose a course of action consistent with what it knows.

Neural Components:

- Learning from Data: Neural networks allow the agent to learn from data and adjust its behavior based on feedback from the environment.

- Generalization: Neural components help apply learned knowledge to new, unseen scenarios, making the agent more adaptable.
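One common way to wire these two kinds of components together is action masking (sometimes called shielding): symbolic rules decide which actions are permissible, and a learned model ranks only those. The sketch below assumes hypothetical facts, rules, features, and fixed weights; the single linear layer merely stands in for a trained neural network, and the example illustrates the general pattern rather than any specific method from the survey.

```python
import math
from typing import Dict, List, Set, Tuple

# Hypothetical symbolic knowledge: each action is permitted only when its rule holds.
RULES = {
    "move_forward": lambda facts: "obstacle_ahead" not in facts,
    "open_door": lambda facts: "has_key" in facts and "at_door" in facts,
    "wait": lambda facts: True,
}

# Hypothetical weights standing in for a trained network (one row per action).
WEIGHTS = {
    "move_forward": [2.0, 0.5],
    "open_door": [0.1, 1.0],
    "wait": [0.0, 0.2],
}


def allowed_actions(facts: Set[str]) -> List[str]:
    """Symbolic component: keep only actions whose logical rule is satisfied."""
    return [a for a, rule in RULES.items() if rule(facts)]


def neural_scores(features: List[float]) -> Dict[str, float]:
    """Neural component (stand-in): a single linear layer giving one score per action."""
    return {a: sum(w * x for w, x in zip(ws, features)) for a, ws in WEIGHTS.items()}


def select_action(facts: Set[str], features: List[float]) -> Tuple[str, Dict[str, float]]:
    legal = allowed_actions(facts)
    scores = neural_scores(features)
    # Masked softmax: forbidden actions are excluded, so they get zero probability.
    top = max(scores[a] for a in legal)
    exps = {a: math.exp(scores[a] - top) for a in legal}
    total = sum(exps.values())
    probs = {a: e / total for a, e in exps.items()}
    return max(probs, key=probs.get), probs


action, probs = select_action({"obstacle_ahead", "at_door"}, [1.0, 0.0])
print(action, probs)
```

Here the learned scores favor `move_forward`, but the symbolic rule forbids it because an obstacle is ahead, and `open_door` is ruled out because the agent lacks the key, so the agent waits. The neural part supplies preferences learned from data; the symbolic part guarantees that those preferences never override explicit constraints.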

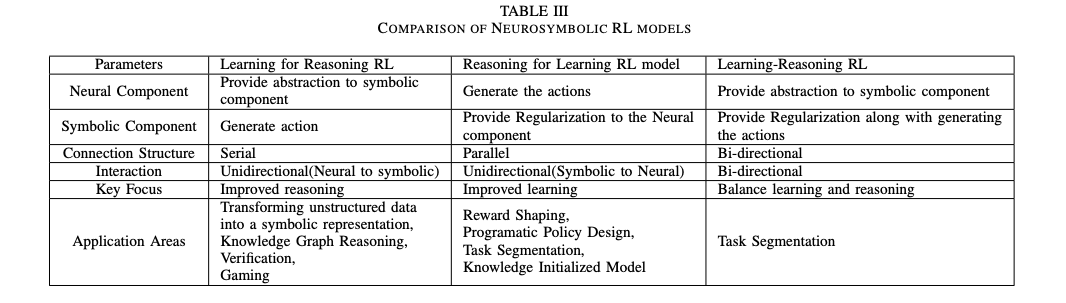

Neurosymbolic RL and planning aim to combine symbolic reasoning with neural learning to create AI systems that can reason logically, learn from interactions, and apply what they learn to new situations.

Overview of the Survey

The survey provides a comprehensive review of recent progress in neurosymbolic RL and planning. It covers various approaches for integrating symbolic reasoning with neural learning, the challenges involved, and potential future directions.

Key topics in the survey include:

- Neurosymbolic Architectures: Different architectures that enable the integration of symbolic and neural components, each with its own benefits and challenges.

- Training Neurosymbolic Systems: Training strategies that ensure the symbolic and neural components work together effectively.

- Applications and Examples: Illustrative examples and case studies where neurosymbolic RL and planning have shown promise.

- Challenges and Future Directions: The obstacles to neurosymbolic integration and potential research directions for addressing them.

Conclusion

Creating AI that can learn like humans, reason like computers, and act intelligently in complex environments is a challenging but exciting goal. The neurosymbolic approach offers a promising way to bridge the gap between data-driven learning and explicit reasoning in current AI research. As we continue to explore the neurosymbolic realm, the vision of robust, interpretable AI systems capable of sophisticated reasoning becomes more attainable.