The paper “Simplifying Transformer Blocks” by Bobby He & Thomas Hofmann provides important insights into making transformer architectures more efficient. Transformers are critical in machine learning, especially in natural language processing (NLP) and computer vision. However, they often face challenges due to their complex designs, which can slow down training and inference. This study looks at ways to simplify transformer blocks without hurting training speed or performance. It addresses the gap between simple theories and complex real-world implementations in neural network architectures.

You can find more information about Simplifying Transformer Blocks at the GitHub repository and read the full paper here.

Key Contributions:

-

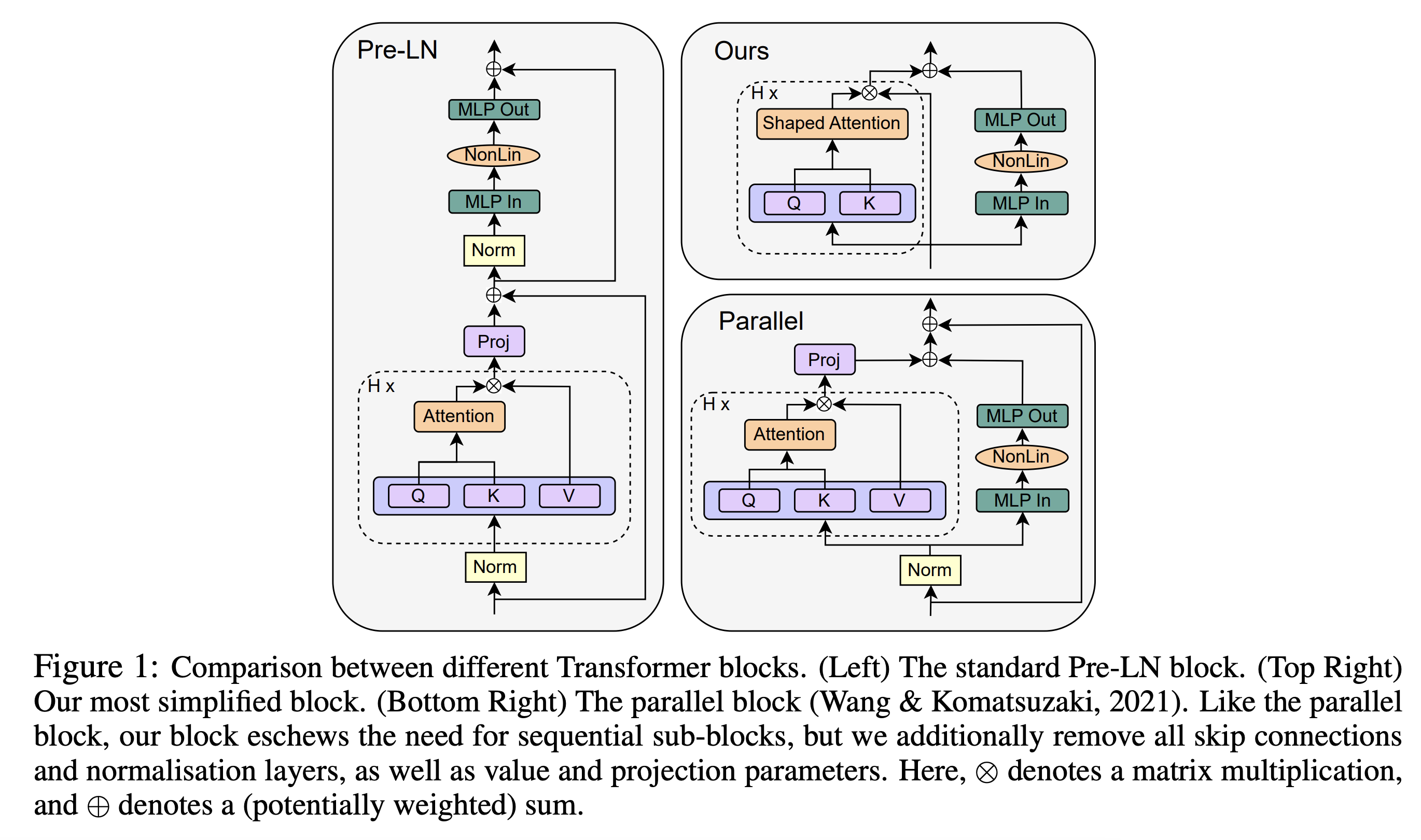

Reduced Complexity: The authors question the need for several standard parts in transformer blocks, like skip connections, value/projection matrices, and normalization layers. They suggest removing these to simplify the architecture without impacting training effectiveness.

-

Efficient Signal Propagation: The paper uses signal propagation theory and experiments to show that these simplifications still allow for effective learning and signal processing. Signal propagation is an influential theory in designing deep learning architectures, and this work expands on it.

-

Parameter Reduction: Removing these components significantly reduces the parameter count by up to 16%, leading to a simpler, more efficient architecture.

-

Increased Throughput: Despite these simplifications, the model shows a 15% increase in training throughput, speeding up model training and application.
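A back-of-the-envelope count suggests where a parameter saving of around 16% can come from. The sketch below assumes a standard block with d×d query, key, value and output-projection matrices and an MLP with a 4× hidden expansion, and it ignores biases, normalization parameters and embedding tables. `block_params` and the width of 768 are hypothetical choices for illustration, not code or settings from the paper.

```python
def block_params(d_model, mlp_ratio=4, keep_value_proj=True):
    """Count weight-matrix parameters in one transformer block.

    Hypothetical helper: biases, normalization gains and embeddings are ignored.
    """
    attn = 2 * d_model * d_model                 # query and key matrices
    if keep_value_proj:
        attn += 2 * d_model * d_model            # value and output-projection matrices
    mlp = 2 * d_model * (mlp_ratio * d_model)    # MLP up- and down-projections
    return attn + mlp


d = 768  # example width, chosen only for illustration
standard = block_params(d)
simplified = block_params(d, keep_value_proj=False)
saving = 1 - simplified / standard
print(f"{standard=:,} {simplified=:,} saving={saving:.1%}")
# prints: standard=7,077,888 simplified=5,898,240 saving=16.7%
```

Under these assumptions the value and projection matrices account for 2d² of the 12d² weights in each block, i.e. 1/6 ≈ 16.7%. Embedding tables and other model-level parameters are unaffected, so the whole-model saving is smaller, which is in line with the "up to 16%" figure.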

Detailed Look at Simplifications:

- Attention Sub-block Skip Connection: This component, usually considered essential for performance, is removed. Removing it naively leads to signal degeneracy and rank collapse; the authors avoid these problems by biasing the attention matrix toward the identity, so trainability is preserved.

- Value and Projection Parameters: The paper shows that the value and projection parameters can be removed, i.e. fixed to the identity. This reduces the model's parameter count and complexity without slowing per-update training.

- MLP Sub-block Skip Connection: The research also questions the need for this component, which is standard in transformer designs, and removes it without losing training speed.

- Normalization Layers: The study re-evaluates the role of normalization layers in transformers and removes them, on the grounds that their effect can be replicated by other means, such as downweighting residual branches or biasing attention matrices.
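The attention-side changes described above (no skip connection, identity value and projection, no normalization) can be combined in a few lines. The NumPy sketch below is illustrative only: it assumes single-head causal attention and an attention matrix of the form αI + β·softmax(QKᵀ/√d_k) - γC, where C is uniform causal attention, in the spirit of the "biasing attention matrices" idea. The function names and the α, β, γ parameterization are assumptions, not the authors' code, and the MLP sub-block is omitted.

```python
import numpy as np


def causal_softmax(logits):
    """Row-wise softmax of a (T, T) logit matrix, masking out future positions."""
    T = logits.shape[0]
    visible = np.tril(np.ones((T, T), dtype=bool))        # position i may attend to j <= i
    masked = np.where(visible, logits, -np.inf)
    masked = masked - masked.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(masked)                              # exp(-inf) = 0 for masked entries
    return weights / weights.sum(axis=-1, keepdims=True)


def simplified_attention(X, W_q, W_k, alpha=1.0, beta=1.0, gamma=1.0):
    """Hypothetical single-head attention sub-block with:
    - no skip connection (the result is not added back to X),
    - value and projection matrices fixed to the identity (X is mixed directly),
    - no normalization layer,
    - an attention matrix biased toward the identity (assumed form alpha*I + beta*A - gamma*C).
    """
    T = X.shape[0]
    d_k = W_q.shape[1]
    logits = (X @ W_q) @ (X @ W_k).T / np.sqrt(d_k)
    A = causal_softmax(logits)
    C = causal_softmax(np.zeros((T, T)))  # uniform attention over visible positions
    shaped = alpha * np.eye(T) + beta * A - gamma * C
    return shaped @ X


rng = np.random.default_rng(0)
X = rng.standard_normal((6, 8))
W_q_zero = np.zeros((8, 4))  # zero query weights make A equal to C
W_k = rng.standard_normal((8, 4))

# With beta == gamma and zero queries, the shaped matrix is exactly alpha*I,
# so a deep skipless stack passes the input through unchanged.
Y = X
for _ in range(50):
    Y = simplified_attention(Y, W_q_zero, W_k)
print(np.allclose(Y, X))  # True

# Plain causal softmax attention (alpha = gamma = 0) stacked without skips
# drives every token toward the first one: a simple case of rank collapse.
Z = X
for _ in range(50):
    Z = simplified_attention(Z, W_q_zero, W_k, alpha=0.0, gamma=0.0)
print(np.allclose(Z, X[0], atol=1e-3))  # True
```

The demo contrasts the two regimes discussed above: when the attention matrix starts at the identity, signals pass through a deep skipless stack intact, whereas plain softmax attention without a skip connection mixes tokens until they become indistinguishable.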

Implications:

These findings could significantly influence how transformer models are designed and used, especially in compute-intensive areas such as NLP and computer vision. The approach balances efficiency and performance, addressing one of the major challenges in deep learning.

For a full understanding of the methods, experiments, and findings, read the complete paper here.