Federated learning (FL) relies on clients providing local model updates to train a global model collaboratively. To ensure fair rewards and effective client selection, measuring each client’s contribution is essential. However, current methods often struggle with high computational requirements, dependence on global validation datasets, and susceptibility to malicious clients.

Zhang et al. propose FRECA (Fair, Robust, and Efficient Client Assessment), a method designed to address all three of these challenges.

Why Existing Client Assessment Methods Fall Short

Evaluating client contributions in FL is difficult for several reasons:

- Client data is not independent and identically distributed (non-IID)

- Malicious clients can introduce noisy or divergent updates

- Access to clients’ local data or a global validation dataset is often restricted

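Popular approaches such as the Shapley value and leave-one-out (LOO) are computationally intensive, because they must retrain or re-evaluate the model for many combinations of clients (every subset of clients, for an exact Shapley value). They also overlook how Byzantine-resilient aggregation strategies affect client contributions.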
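To make the cost difference concrete, here is a minimal brute-force sketch of exact Shapley values next to LOO. The `utility` argument is a hypothetical stand-in for "aggregate a model from this subset of clients and score it on validation data". In real FL, each call would mean training or at least evaluating a model. The exact Shapley computation needs one call per subset, i.e. 2**n calls for n clients, while LOO needs only n + 1. The capped toy utility and the client names are assumptions chosen for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(clients, utility):
    """Exact Shapley values by brute force over every subset of clients.

    utility(subset) is a placeholder for "build a model from these clients and
    score it on validation data"; results are cached, so it runs once per subset.
    """
    n = len(clients)
    cache = {}

    def u(subset):
        key = frozenset(subset)
        if key not in cache:
            cache[key] = utility(key)
        return cache[key]

    values = {}
    for c in clients:
        others = [o for o in clients if o != c]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S without c
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                total += weight * (u(subset + (c,)) - u(subset))
        values[c] = total
    return values, len(cache)  # len(cache) == 2**n utility evaluations


def leave_one_out(clients, utility):
    """LOO: utility of all clients minus utility without each client (n + 1 calls)."""
    everyone = frozenset(clients)
    full = utility(everyone)
    values = {c: full - utility(everyone - {c}) for c in clients}
    return values, len(clients) + 1


# Hypothetical toy utility: client "quality" adds up but saturates at 1.0.
quality = {"A": 0.5, "B": 0.5, "C": 0.5}


def capped_utility(subset):
    return min(1.0, sum(quality[c] for c in subset))


sv, sv_calls = shapley_values(list(quality), capped_utility)
loo, loo_calls = leave_one_out(list(quality), capped_utility)
print(sv, sv_calls)
print(loo, loo_calls)
```

With three interchangeable clients whose combined quality saturates the cap, the Shapley value splits the credit evenly (1/3 each) using all 8 subsets, while LOO uses 4 evaluations and gives every client zero, because removing any single client costs nothing. At 10 clients, the exact Shapley computation already needs 1,024 utility evaluations.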

How FRECA Addresses the Challenges

FRECA builds on FedTruth, a Byzantine-resilient aggregation framework, to estimate the ground truth of the global model update. FedTruth assigns each client an aggregation weight, so the estimate draws on all clients while limiting the influence of malicious ones. On top of this estimate, FRECA introduces two key metrics:

- Client Performance Evaluation Metric: Uses the aggregation weight FedTruth assigns to a client, which reflects how far the client's local model update is from the estimated ground truth.

- Net Contribution Evaluation Metric: Measures a client’s net contribution to the global model based on their share in the gap distance between the estimated and actual ground truth.

By incorporating FedTruth's Byzantine-resilient aggregation algorithm, FRECA is robust against attacks from malicious clients. It also operates solely on local model updates. As a result, it needs no global validation dataset and avoids repeatedly evaluating models on subsets of clients, which makes it computationally efficient.
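The sketch below illustrates the general idea under stated assumptions and does not reproduce FedTruth's exact update rule. `truth_discovery_aggregate` is a hypothetical truth-discovery loop in the CRH style. It first weights each client by the log-ratio of the total distance to that client's own distance from the current estimate. It then re-estimates the ground truth as the weighted average. The resulting aggregation weight doubles as the performance score, as FRECA does. For net contribution, the hypothetical `assess_clients` substitutes a simpler proxy: each client's weighted projection onto the estimated update. This proxy is not the paper's gap-distance formula. Only the client updates are used, with no validation data.

```python
import numpy as np


def truth_discovery_aggregate(updates, n_iters=10, eps=1e-12):
    """Hypothetical CRH-style truth discovery, standing in for FedTruth.

    updates: array of shape (n_clients, n_params), with n_clients >= 2.
    Only the updates are used; no validation data is needed.
    Returns (estimated ground-truth update, aggregation weights summing to 1).
    """
    updates = np.asarray(updates, dtype=float)
    truth = updates.mean(axis=0)  # start from plain averaging
    for _ in range(n_iters):
        # Squared L2 distance of each client's update from the current estimate.
        dist = np.sum((updates - truth) ** 2, axis=1) + eps
        # Clients far from the estimate get weights near 0.
        weights = np.log(dist.sum() / dist)
        weights /= weights.sum()
        # Re-estimate the ground truth as the weighted average of updates.
        truth = weights @ updates
    return truth, weights


def assess_clients(updates, truth, weights):
    """Per-client scores derived from the aggregation result.

    performance: the aggregation weight itself, mirroring FRECA's use of it.
    share: a HYPOTHETICAL net-contribution proxy (not the paper's formula),
        each client's weighted projection onto the estimated update. Because
        truth == weights @ updates, the shares sum to 1.
    """
    updates = np.asarray(updates, dtype=float)
    performance = weights.copy()
    share = weights * (updates @ truth) / (truth @ truth)
    return performance, share


honest = [[1.0, 1.1], [0.9, 1.0], [1.1, 0.9]]
malicious = [[-10.0, -10.0]]  # hypothetical Byzantine update
updates = np.array(honest + malicious)

truth, weights = truth_discovery_aggregate(updates)
performance, share = assess_clients(updates, truth, weights)
print("estimate:", np.round(truth, 3))
print("performance:", np.round(performance, 4))
print("share:", np.round(share, 4))
```

Plain averaging would let the single Byzantine update drag the aggregate to about [-1.75, -1.75]. Here, the estimate stays close to the honest clients' consensus near [1, 1]. The malicious client's weight drops to nearly zero, and its share turns slightly negative because its update points against the consensus.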

Experimental Results Validate FRECA’s Effectiveness

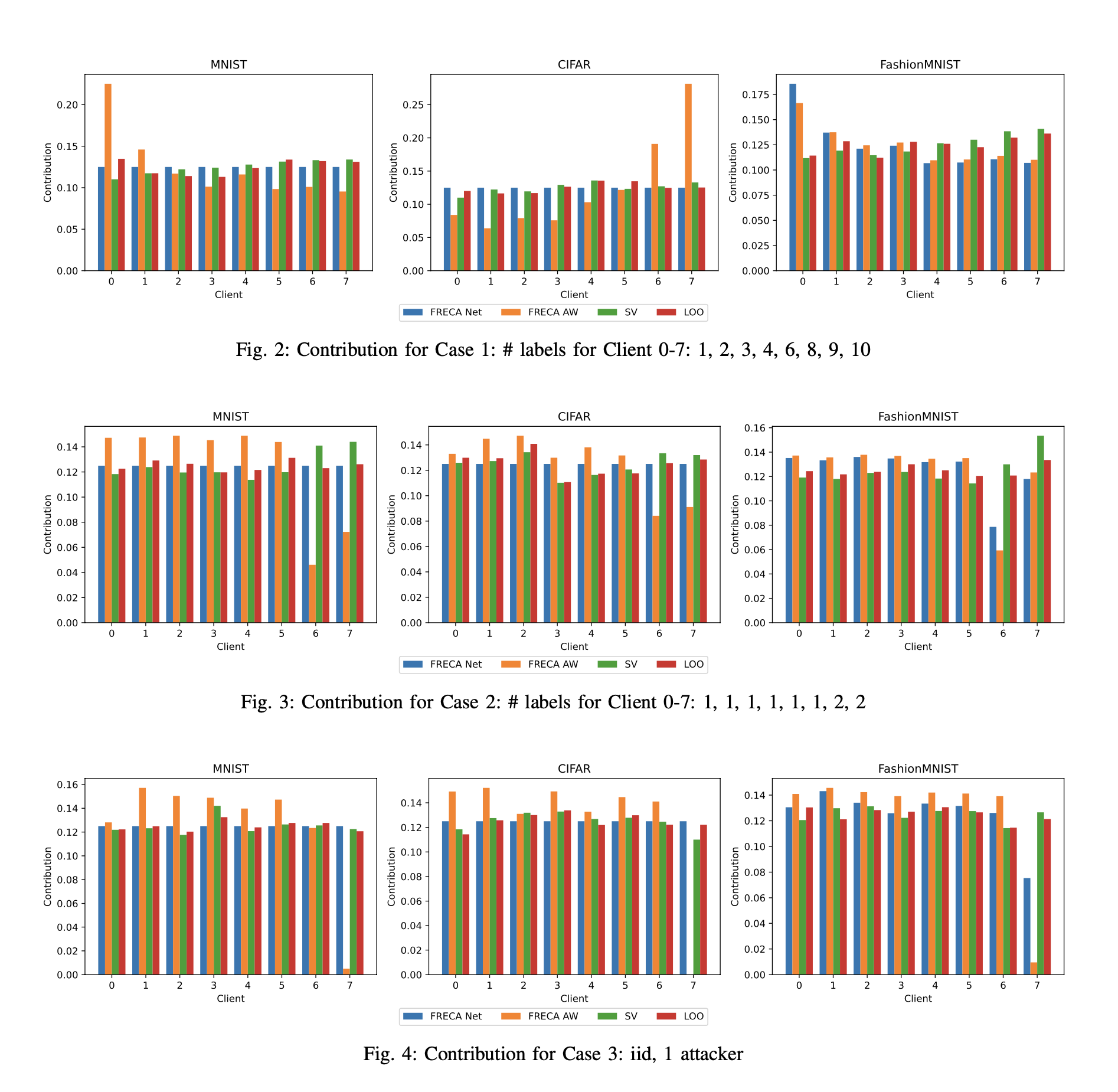

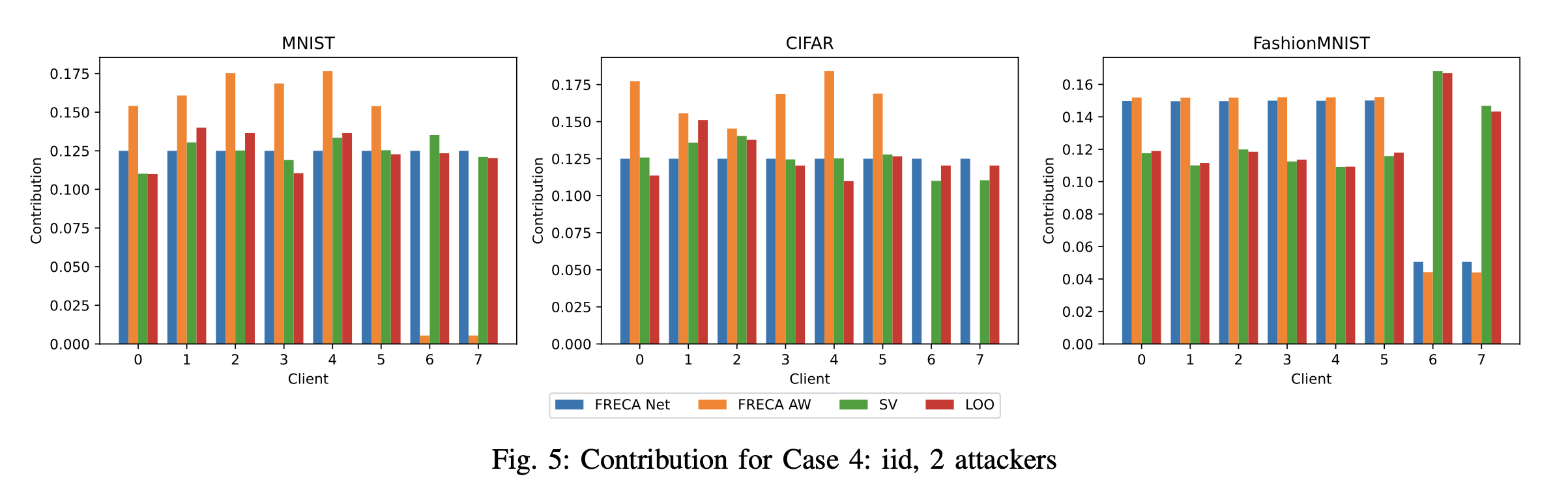

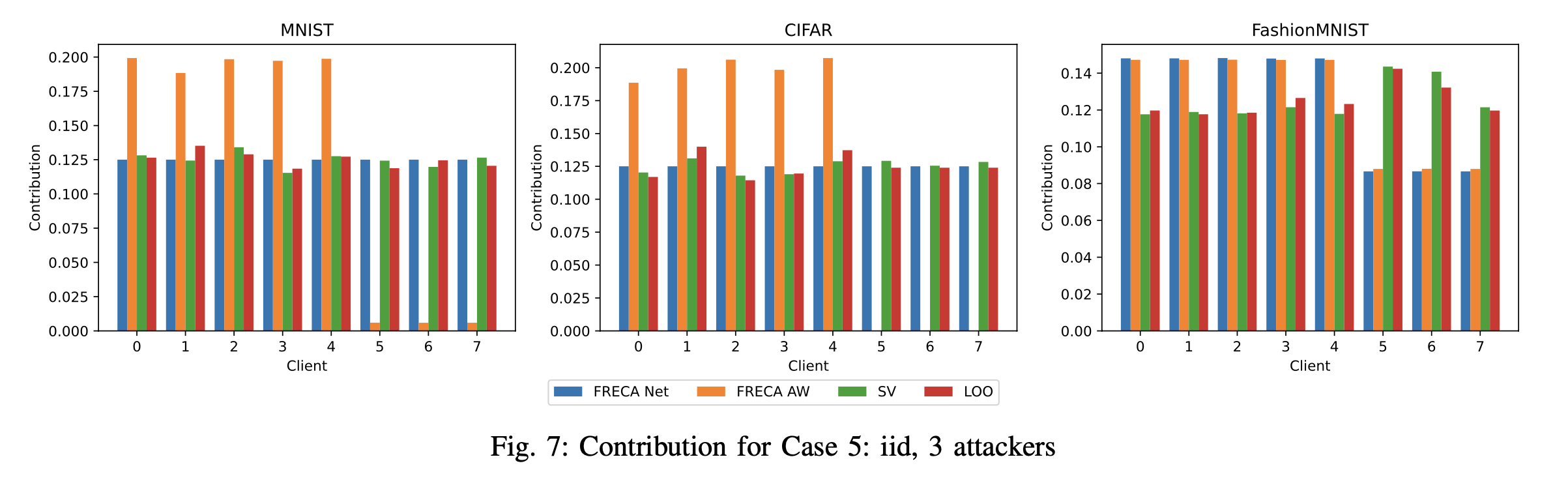

The authors test FRECA on MNIST, CIFAR-10, and FashionMNIST datasets under various conditions, including non-IID data distribution and the presence of malicious clients. The results demonstrate:

- FRECA’s net contribution metric closely matches Shapley value and LOO approaches in most cases, confirming its ability to evaluate client contributions accurately.

- FRECA's performance metric, based on FedTruth's aggregation weights, effectively identifies malicious clients by assigning them near-zero weights, which also limits their impact on the global model.

- FRECA is computationally efficient, consuming significantly less time than Shapley value and LOO methods.

The Future of Fair and Robust Client Assessment in FL

As federated learning gains popularity, methods like FRECA will be crucial for ensuring equitable compensation and effective client selection. By addressing the limitations of existing approaches, FRECA provides a reliable and efficient way to assess client contributions, promoting fairness and robustness in FL systems.

With its combination of innovative metrics and the FedTruth framework, FRECA represents a significant step forward in quantifying client contributions in federated learning. As more researchers build upon this foundation, we can expect even more advanced and refined methods to emerge, driving the adoption and success of FL in various domains.