Federated learning (FL) enables collaborative model training across many clients (for example, mobile devices) while keeping each client’s raw data local, which helps preserve privacy. However, ensuring fairness among participating clients is a critical challenge. Shayan Mohajer Hamidi and En-Hui Yang introduce AdaFed. In AdaFed, the server computes a common descent direction for all clients and adaptively tunes it to promote fairness without compromising model accuracy.

The Fairness Challenge in Federated Learning

Client data in FL are typically heterogeneous (non-IID), so a single global model can perform well for some clients and poorly for others. Standard algorithms such as FedAvg aggregate local updates by weighted averaging, and they do not guarantee fairness. The averaged update may favor some clients and can even increase the loss of others. AdaFed addresses this by finding a common descent direction that decreases every client’s loss while prioritizing clients with larger loss values.
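
To make the fairness concern concrete, the hypothetical two-client example below averages two conflicting local gradients with equal weights and checks the first-order effect on each client. This is a simplified FedAvg-style step, and the numbers are illustrative rather than taken from the paper. A negative inner product between the averaged direction and a client’s gradient means that a small step θ ← θ - η·avg increases that client’s loss.

```python
# Hypothetical two-client example; the gradient values are illustrative only.
g1 = [1.0, 0.0]    # client 1's local gradient at the current global model
g2 = [-0.8, 0.1]   # client 2's local gradient, which conflicts with client 1's


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


# Equal-weight averaging of the local gradients (a simplified FedAvg/FedSGD-style step).
avg = [(a + b) / 2 for a, b in zip(g1, g2)]

# For a small step theta <- theta - eta * avg, client k's loss changes by
# approximately -eta * <avg, g_k>, so a negative inner product means that
# client's loss goes up.
for name, g in [("client 1", g1), ("client 2", g2)]:
    print(f"{name}: <avg, g> = {dot(avg, g):.3f}")
```

In this example the averaged step helps client 1 but, to first order, hurts client 2. A direction that decreases both losses still exists, for instance d = (1, 10), which has a positive inner product with both gradients.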

AdaFed: Adaptive Common Descent Direction

AdaFed finds a common descent direction that:

- Decreases the loss functions of all clients.

- Prioritizes clients with larger loss values by decreasing their losses at a higher rate.
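
The two properties can be written in symbols. Let f_k be client k’s (positive) loss, let g_k be its local gradient, and let d be the common direction, applied as θ ← θ - η d. The conditions are stated to first order, since f_k(θ - η d) ≈ f_k(θ) - η⟨d, g_k⟩. The proportional form on the last line is an illustrative assumption about how "prioritizes larger losses" can be made precise, with a constant c and an exponent γ. It is not notation taken from the paper.

```latex
% f_k: loss of client k (assumed positive); g_k = \nabla f_k(\theta): its local gradient;
% d: common direction, applied as \theta \leftarrow \theta - \eta d.
\begin{aligned}
&\text{(1) every loss decreases:} && \langle d, g_k \rangle > 0 \quad \text{for all } k \in \{1, \dots, K\},\\
&\text{(2) larger loss, faster decrease:} && f_i > f_j \;\Longrightarrow\; \langle d, g_i \rangle > \langle d, g_j \rangle,\\
&\text{one way to satisfy both (assumed):} && \langle d, g_k \rangle = c\, f_k^{\gamma}, \quad c > 0,\ \gamma > 0.
\end{aligned}
```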

AdaFed employs a two-phase approach:

- Orthogonalization: The server transforms the local gradients into mutually orthogonal vectors that span the same subspace as the original gradients.

- Optimal Weight Calculation: The server finds the weights that give the minimum-norm vector in the convex hull of the orthogonal vectors. Because the vectors are orthogonal, this problem has a closed-form solution, which yields the common descent direction.
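
The closed form in the second phase follows directly from orthogonality. The derivation below assumes that the phase-1 vectors ỹ_1 to ỹ_K are nonzero and mutually orthogonal, and Δ_K denotes the probability simplex. It is the standard minimum-norm argument, not a transcription of the paper’s equations.

```latex
% \tilde{y}_1, \dots, \tilde{y}_K: nonzero, mutually orthogonal vectors from phase 1;
% \Delta_K = \{\lambda \in \mathbb{R}^K : \lambda_k \ge 0,\ \sum_k \lambda_k = 1\}.
\begin{aligned}
\lambda^\star &= \arg\min_{\lambda \in \Delta_K} \Big\| \sum_{k=1}^{K} \lambda_k \tilde{y}_k \Big\|^2
  = \arg\min_{\lambda \in \Delta_K} \sum_{k=1}^{K} \lambda_k^2 \|\tilde{y}_k\|^2
  \quad (\text{cross terms vanish}),\\
\lambda_k^\star &= \frac{\|\tilde{y}_k\|^{-2}}{\sum_{j=1}^{K} \|\tilde{y}_j\|^{-2}},
  \qquad d = \sum_{k=1}^{K} \lambda_k^\star \tilde{y}_k,\\
\langle d, \tilde{y}_k \rangle &= \lambda_k^\star \|\tilde{y}_k\|^2
  = \frac{1}{\sum_{j=1}^{K} \|\tilde{y}_j\|^{-2}} = \|d\|^2 \quad \text{for every } k.
\end{aligned}
```

Every λ_k^⋆ is strictly positive, so the nonnegativity constraints are inactive. The resulting d has the same positive inner product with every orthogonal vector.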

AdaFed adaptively tunes the common descent direction in every round based on each client’s local gradient and loss value, so fairness is promoted throughout training.
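
The following Python sketch combines the two phases with loss-based tuning to compute a common direction d from local gradients and losses. It is a hypothetical illustration, not the authors’ code. Several parts are assumptions: the helper name adafed_style_direction, the exponent gamma, the 1/f_k^gamma scaling, and the alpha_k rescaling inside Gram-Schmidt. These choices make ⟨d, g_k⟩ come out proportional to f_k^gamma. The sketch also assumes positive losses and linearly independent gradients.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def adafed_style_direction(grads, losses, gamma=1.0):
    """Hypothetical sketch of a two-phase common descent direction.

    Assumptions (not taken verbatim from the paper):
      * each gradient is first scaled by 1 / loss**gamma, so clients with
        larger losses end up with a larger directional derivative;
      * phase 1 is a Gram-Schmidt variant whose k-th output is divided by
        alpha_k so that <d, scaled g_k> is identical for every client;
      * gradients are linearly independent and losses are positive.
    Returns the direction d and the convex weights of phase 2.
    """
    # Loss-based scaling: afterwards <d, g_k> will be proportional to f_k**gamma.
    scaled = [[x / (f ** gamma) for x in g] for g, f in zip(grads, losses)]

    # Phase 1: orthogonalize while keeping the span of the scaled gradients.
    ortho = []
    for g in scaled:
        residual = list(g)
        coeff_sum = 0.0
        for y in ortho:
            c = dot(g, y) / dot(y, y)  # projection coefficient of g onto y
            coeff_sum += c
            residual = [r - c * yi for r, yi in zip(residual, y)]
        alpha = 1.0 - coeff_sum  # assumed rescaling factor; must be nonzero
        ortho.append([r / alpha for r in residual])

    # Phase 2: closed-form minimum-norm point of the convex hull of the
    # orthogonal vectors, with weight_k proportional to 1 / ||y_k||^2.
    inv_sq = [1.0 / dot(y, y) for y in ortho]
    total = sum(inv_sq)
    weights = [w / total for w in inv_sq]
    dim = len(grads[0])
    d = [sum(w * y[i] for w, y in zip(weights, ortho)) for i in range(dim)]
    return d, weights


# Example: three clients, the third has the largest loss.
grads = [[1.0, 0.0, 0.0], [0.5, 1.0, 0.0], [0.2, 0.3, 1.0]]
losses = [1.0, 2.0, 4.0]
d, weights = adafed_style_direction(grads, losses, gamma=1.0)
print([round(dot(d, g), 4) for g in grads])  # inner products grow with the loss
```

With gamma = 0 the scaling disappears, and every client receives the same first-order decrease. Larger gamma tilts d further toward high-loss clients. The server would then apply θ ← θ - η d.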

Convergence Analysis and Experimental Validation

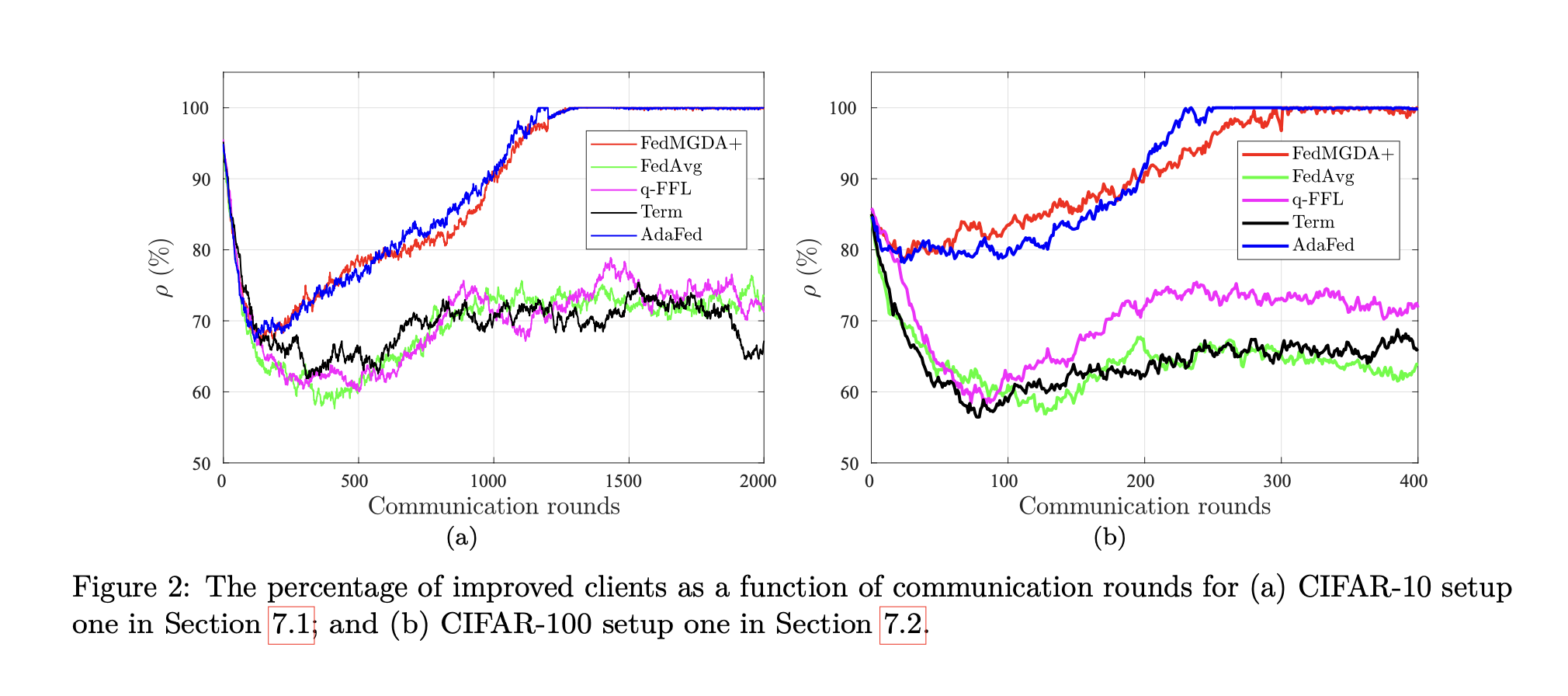

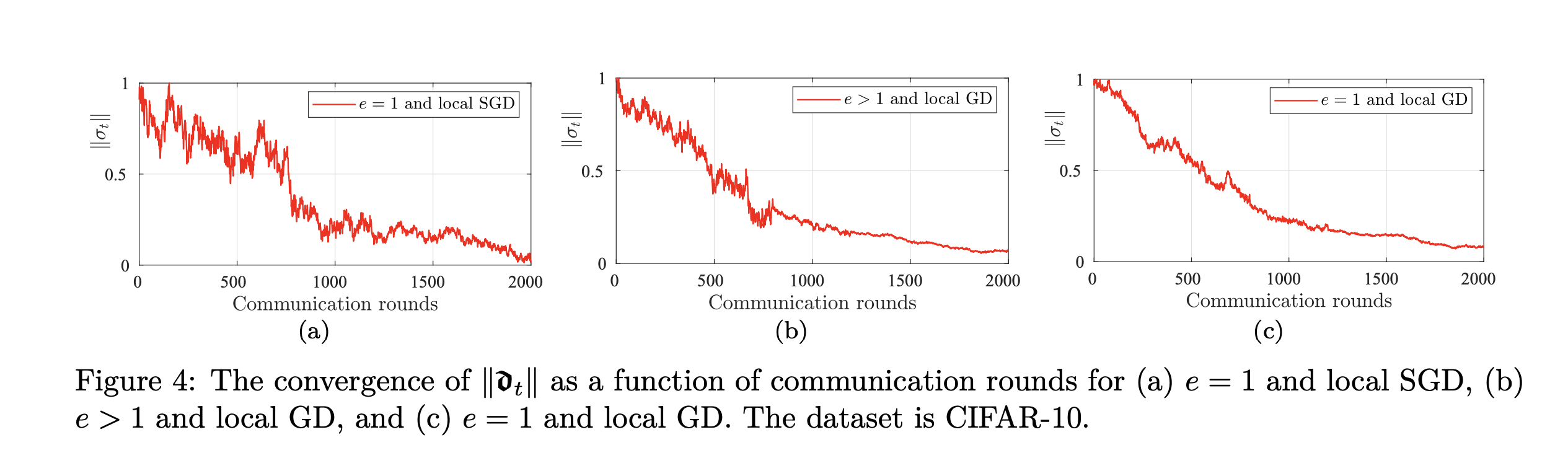

The authors prove that AdaFed converges to a Pareto-stationary solution under different FL setups. Experiments on datasets including CIFAR-10, CIFAR-100, FEMNIST, and Shakespeare show that AdaFed achieves higher fairness among clients (more uniform per-client performance) than state-of-the-art fair FL methods while maintaining comparable accuracy.
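
Pareto-stationarity is the standard first-order optimality notion in multi-objective optimization. Below is the textbook definition, written with the notation used for the common descent direction. It is not a restatement of the paper’s convergence theorem. The second equivalence is Gordan’s theorem of the alternative: at a Pareto-stationary point, no common descent direction exists.

```latex
% \Delta_K = \{\lambda \in \mathbb{R}^K : \lambda_k \ge 0,\ \sum_k \lambda_k = 1\}
\theta^\star \text{ is Pareto-stationary}
\iff \min_{\lambda \in \Delta_K} \Big\| \sum_{k=1}^{K} \lambda_k \nabla f_k(\theta^\star) \Big\| = 0
\iff \text{no } d \text{ satisfies } \langle d, \nabla f_k(\theta^\star) \rangle > 0 \text{ for all } k.
```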

Integration with Label Noise Correction

AdaFed can be integrated with label noise correction methods like FedCorr to address mislabeled instances in real-world datasets. Experiments on the Clothing1M dataset show that combining AdaFed with FedCorr improves average accuracy while maintaining fairness among clients.

Conclusion

AdaFed offers a new approach to fairness in federated learning. It adaptively tunes the common descent direction based on clients’ loss values. The method is designed so that all clients benefit from collaborative learning, with faster improvement for clients with larger losses. As FL matures, techniques like AdaFed are likely to be important for fair and inclusive collaborative learning across diverse clients.