Federated learning has emerged as a promising approach for training machine learning models on distributed datasets while preserving user privacy. However, the traditional method of adding the same amount of noise to all model parameters to protect sensitive information often reduces model performance. In their recent paper, Talaei and Izadi introduce an adaptive differential privacy framework that adjusts noise levels based on the importance of different features and parameters, aiming to strike a better balance between privacy and accuracy.

The Privacy-Accuracy Trade-off

Differential privacy techniques in federated learning protect user data by adding random noise to model parameters. This preserves privacy, but it can significantly degrade the accuracy of the resulting models: more noise gives stronger privacy and lower accuracy, and less noise does the reverse. The challenge lies in finding the right balance between these two goals.
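For reference, the uniform baseline can be sketched with the classic Gaussian mechanism, where the noise standard deviation is set from the privacy parameters (ε, δ) and the sensitivity of the update. This is a minimal illustration, not the paper's code: the function names are hypothetical, and it assumes each update has been clipped so that its L2 sensitivity is 1.0.

```python
import math

import numpy as np


def gaussian_sigma(epsilon: float, delta: float, sensitivity: float) -> float:
    """Noise std of the classic Gaussian mechanism (stated for 0 < epsilon < 1)."""
    return math.sqrt(2 * math.log(1.25 / delta)) * sensitivity / epsilon


def add_constant_noise(params: np.ndarray, sigma: float, rng: np.random.Generator) -> np.ndarray:
    """Uniform baseline: every parameter receives noise with the same std."""
    return params + rng.normal(0.0, sigma, size=params.shape)


rng = np.random.default_rng(seed=0)
params = np.zeros(5)  # stand-in for a flattened model update
# Assumption: updates are clipped so that their L2 sensitivity is 1.0.
sigma = gaussian_sigma(epsilon=0.5, delta=1e-5, sensitivity=1.0)
noisy_params = add_constant_noise(params, sigma, rng)
print(f"sigma = {sigma:.2f}")
```

With these values σ is about 9.69. Because σ is inversely proportional to ε, halving ε doubles the noise, which is the privacy-accuracy trade-off in its simplest form.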

Adaptive Noise Injection: A Novel Solution

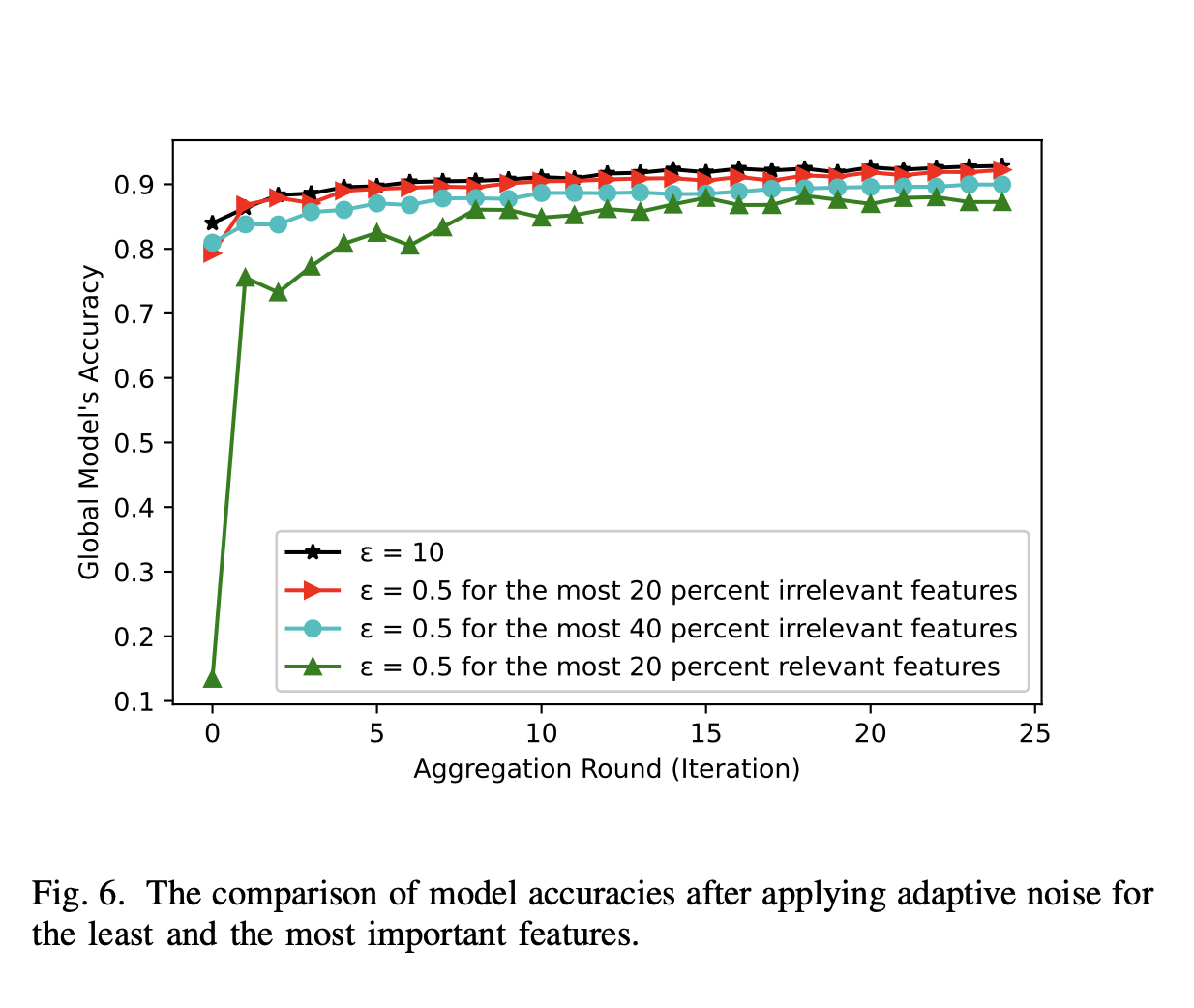

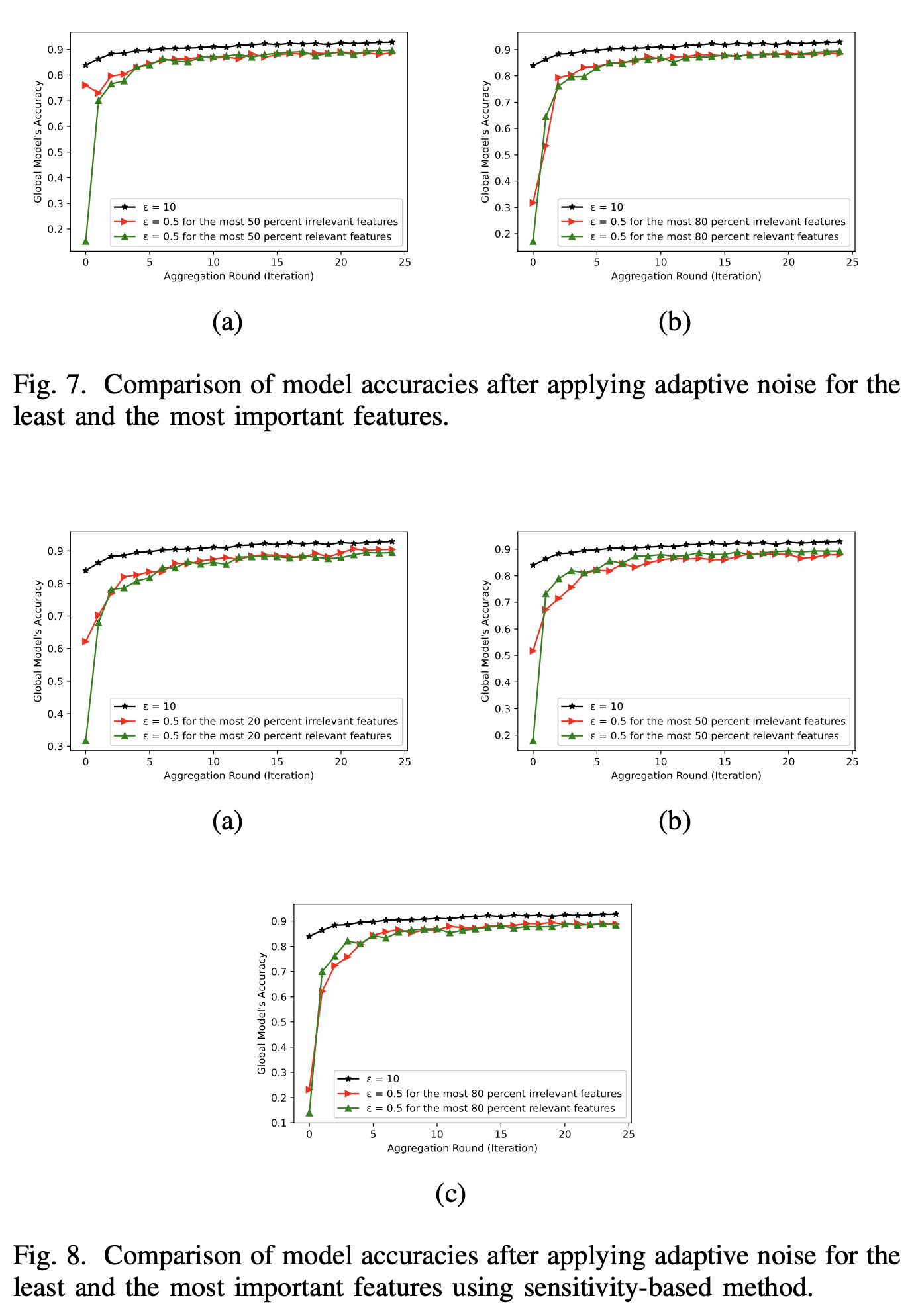

The authors propose a method that adapts the noise injection process to the relative importance of features and parameters. By allocating more noise to less influential components and less noise to the most influential ones, the adaptive framework aims to minimize the overall impact on model accuracy while maintaining strong privacy guarantees.
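One way to picture such an allocation is a rule that maps importance scores to per-parameter noise levels. The minimal sketch below assumes a hypothetical linear mapping (the most important parameter gets `sigma_min`, the least important gets `sigma_max`); the names and the mapping are illustrative rather than the paper's formula, and the sketch performs no privacy accounting for the non-uniform noise.

```python
import numpy as np


def adaptive_noise_scales(importance: np.ndarray, sigma_min: float, sigma_max: float) -> np.ndarray:
    """Hypothetical linear rule: most important -> sigma_min, least important -> sigma_max."""
    imp = np.asarray(importance, dtype=float)
    span = imp.max() - imp.min()
    if span > 0:
        norm = (imp - imp.min()) / span  # rescale scores to [0, 1]
    else:
        norm = np.zeros_like(imp)  # no ranking available: fall back to the largest noise everywhere
    return sigma_min + (sigma_max - sigma_min) * (1.0 - norm)


def add_adaptive_noise(params: np.ndarray, importance: np.ndarray, sigma_min: float,
                       sigma_max: float, rng: np.random.Generator) -> np.ndarray:
    """Add zero-mean Gaussian noise whose std differs per parameter."""
    scales = adaptive_noise_scales(importance, sigma_min, sigma_max)
    return params + rng.normal(0.0, scales)


rng = np.random.default_rng(seed=0)
importance = np.array([0.9, 0.1, 0.5, 0.0])
scales = adaptive_noise_scales(importance, sigma_min=0.1, sigma_max=1.0)
noisy = add_adaptive_noise(np.zeros(4), importance, 0.1, 1.0, rng)
print(scales)
```

Here the parameter with importance 0.9 receives noise with std 0.1, while the one with importance 0.0 receives std 1.0. When all scores are equal, the sketch conservatively applies the largest noise to everything.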

Determining Feature and Parameter Importance

To implement the adaptive approach, the paper presents two methods for assessing the importance of features and parameters in federated learning:

- Sensitivity-based approach: This method measures the impact of perturbing individual features on the model’s output, identifying the most influential components.
- Variance-based method: This approach assesses the change in parameter values during the training process, indicating the relative importance of each parameter.
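Both scoring ideas can be sketched in a few lines. In this minimal illustration, the function names, the perturbation size `eps`, and the choice to treat larger variance as a larger score are assumptions, not details taken from the paper; the resulting scores could then feed whatever noise-allocation rule is in use.

```python
from typing import Callable

import numpy as np


def sensitivity_importance(model: Callable[[np.ndarray], np.ndarray],
                           X: np.ndarray, eps: float = 1e-2) -> np.ndarray:
    """Score each input feature by the mean absolute output change per unit nudge."""
    base = model(X)
    scores = np.empty(X.shape[1])
    for j in range(X.shape[1]):
        X_pert = X.copy()
        X_pert[:, j] += eps  # perturb only feature j
        scores[j] = np.mean(np.abs(model(X_pert) - base)) / eps
    return scores


def variance_importance(param_history: np.ndarray) -> np.ndarray:
    """Score each parameter by the variance of its value across training rounds.

    param_history has shape (num_rounds, num_params).
    Assumption: a larger variance is read as a larger importance score.
    """
    return np.var(param_history, axis=0)


# Toy linear model: the output depends strongly on feature 0 and not at all on feature 1.
w = np.array([2.0, 0.0, -0.5])
X = np.random.default_rng(seed=0).normal(size=(100, 3))
feat_scores = sensitivity_importance(lambda data: data @ w, X)

history = np.array([[1.0, 5.0], [1.0, 3.0], [1.0, 7.0]])  # 3 rounds, 2 parameters
param_scores = variance_importance(history)
print(feat_scores, param_scores)
```

For a linear model the sensitivity score of feature j reduces to the absolute weight |w_j|, so the toy example yields scores of 2.0, 0.0 and 0.5. The first parameter in `history` never changes and therefore gets a variance score of 0.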

Simulations and Results

The researchers demonstrate the effectiveness of their adaptive noise injection method through simulations using the MNIST dataset and multi-layer perceptron (MLP) neural networks. The results show that the proposed approach can maintain a high privacy level while preserving model performance, outperforming the traditional constant noise addition method.

Figure 1: Adaptive noise injection applied to the least and most important features, demonstrating the impact on model accuracy.

Figure 2: The effect of adaptive noise injection on model accuracy when applied to different proportions of important features, using the variance-based method.

The study highlights the importance of carefully selecting the noise amount, the proportion of features or parameters that receive adaptive noise, and the number of iterations in the federated learning process. It also stresses that the specific characteristics of the dataset must be considered to find the best balance between privacy and accuracy.
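The number of iterations matters because privacy loss accumulates across training rounds. The sketch below uses two standard composition results from differential privacy to show this effect; the per-round budget is hypothetical, and the paper's own privacy accounting may differ.

```python
import math


def basic_composition(eps: float, delta: float, rounds: int) -> tuple[float, float]:
    """Sequential composition: epsilon and delta add up linearly over rounds."""
    return rounds * eps, rounds * delta


def advanced_composition(eps: float, delta: float, rounds: int,
                         delta_slack: float) -> tuple[float, float]:
    """Standard advanced composition theorem: a tighter total epsilon
    in exchange for an extra delta_slack in the total delta."""
    eps_total = (math.sqrt(2 * rounds * math.log(1 / delta_slack)) * eps
                 + rounds * eps * (math.exp(eps) - 1))
    return eps_total, rounds * delta + delta_slack


# Hypothetical budget: each federated round is (0.1, 1e-6)-DP and training runs 100 rounds.
print(basic_composition(0.1, 1e-6, 100))
print(advanced_composition(0.1, 1e-6, 100, delta_slack=1e-6))
```

Under basic composition, 100 rounds cost a total ε of 10; the advanced bound lowers this to roughly 6.31. In both cases, more iterations mean a larger overall privacy cost.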

Future Directions and Conclusion

The paper discusses potential avenues for future research, such as exploring the impact of intentionally adding irrelevant features or applying parameter-specific noise distributions. These ideas emphasize the ongoing efforts to refine and optimize privacy-preserving techniques in federated learning.

The adaptive differential privacy method proposed by Talaei and Izadi offers a promising solution to the challenge of balancing privacy and performance in federated learning systems. By intelligently allocating noise based on feature and parameter importance, their approach demonstrates the potential to maintain strong privacy guarantees while minimizing the impact on model accuracy. As privacy concerns continue to shape the development of machine learning technologies, innovative methods like this will play a crucial role in enabling the responsible use of distributed data for training powerful models.