Introduction

As the European Union’s AI Act moves closer to implementation, the machine learning community is grappling with how to adapt its practices to ensure compliance. A recent paper titled “Federated Learning Priorities Under the European Union Artificial Intelligence Act” examines this issue, focusing specifically on the implications for federated learning (FL).

FL is a distributed approach that allows model training on decentralized data without that data ever leaving the client devices. This inherent privacy-preserving nature makes FL an intriguing candidate for building AI systems that align with the Act’s emphasis on “data protection by design and default.” However, the authors note that FL will need to overcome some hurdles to fully realize this potential.
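To make the idea concrete, here is a minimal sketch of federated averaging (FedAvg), one common FL aggregation scheme. It is an illustration only, not the paper's experimental setup: the toy linear model, the two hypothetical clients, the learning rate, and the function names `local_update`, `fed_avg`, and `run_rounds` are all assumptions made for this example. The point to notice is that only model weights travel between clients and server, never the raw data.

```python
from typing import List, Tuple

Weights = List[float]             # [w, b] for the toy model y = w*x + b
Dataset = List[Tuple[float, float]]


def local_update(weights: Weights, data: Dataset,
                 lr: float = 0.1, epochs: int = 5) -> Weights:
    """Run a few gradient-descent steps on one client's private data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def fed_avg(client_weights: List[Weights], client_sizes: List[int]) -> Weights:
    """Server step: average client weights, weighted by local dataset size."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(cw[i] * n for cw, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


def run_rounds(clients: List[Dataset], rounds: int = 300) -> Weights:
    """Repeat: broadcast global weights, train locally, aggregate."""
    global_weights = [0.0, 0.0]
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in clients]
        sizes = [len(data) for data in clients]
        global_weights = fed_avg(updates, sizes)
    return global_weights


# Two hypothetical clients whose data both follow y = 2x + 1.
clients = [
    [(0.0, 1.0), (0.5, 2.0)],
    [(1.0, 3.0), (0.25, 1.5), (0.75, 2.5)],
]
final_weights = run_rounds(clients)
```

In this sketch the server sees only the `[w, b]` pairs returned by each client. Real deployments add client sampling, secure transport, and often the privacy techniques discussed later in this post.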

Key Insights

The paper provides both quantitative and qualitative analysis to explore the intersection of FL and the AI Act. Some key takeaways:

-

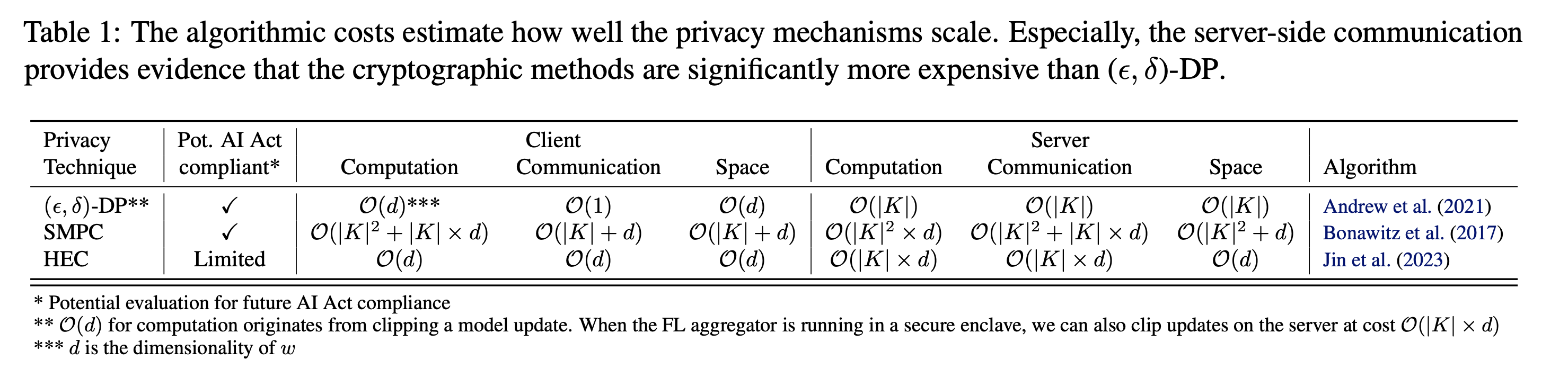

Performance trade-offs: Experiments showed that introducing privacy techniques like differential privacy and homomorphic encryption into FL pipelines comes with significant computational and communication costs. These overheads increase with the number of clients, posing scalability challenges.

-

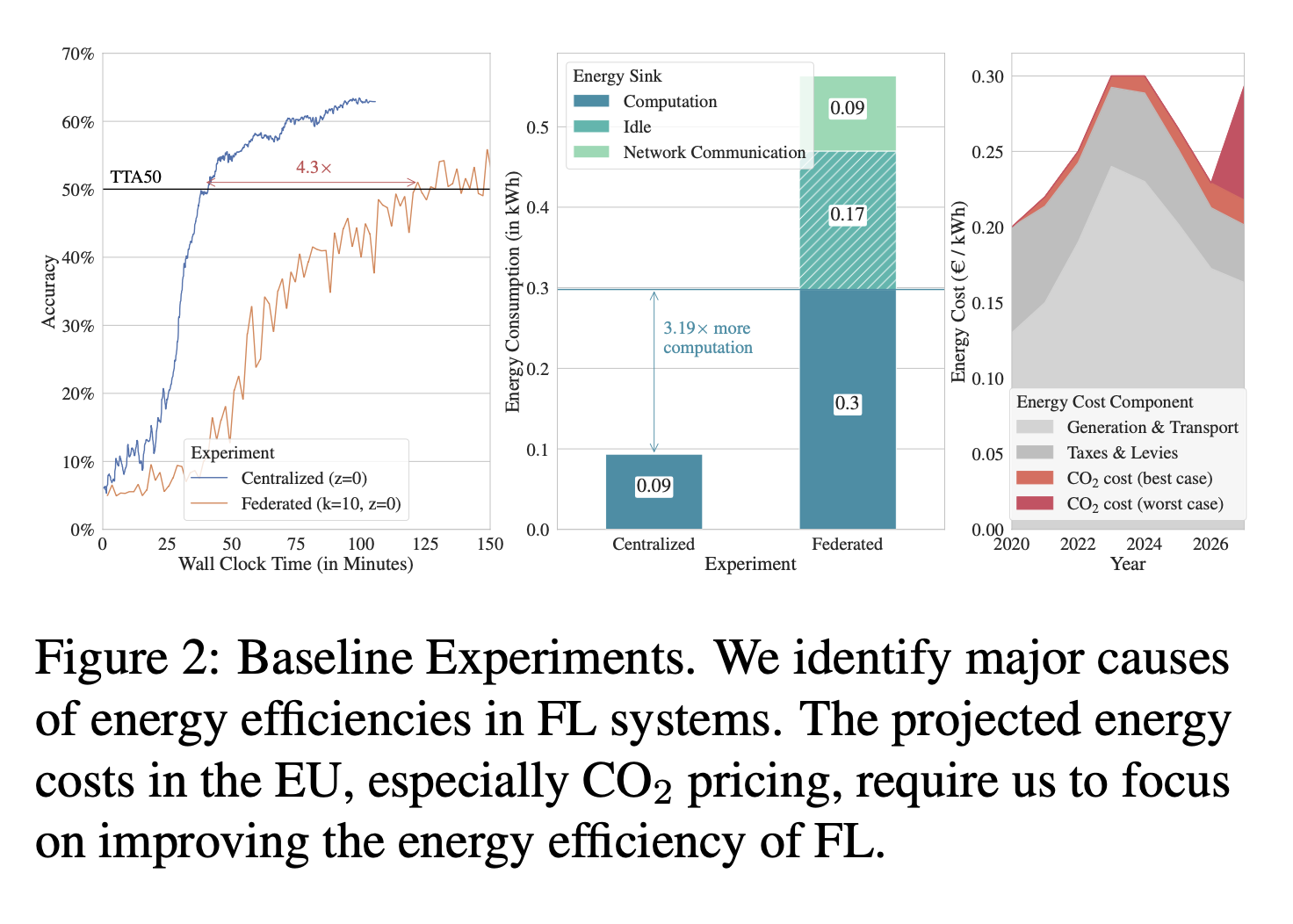

Energy efficiency gap: In the time-to-accuracy metric, FL was found to be 5 times less energy efficient than centralized training, with over a quarter of the energy going to communication costs. As the AI Act is expected to put forth energy efficiency standards, closing this gap will be crucial.

- Validation and robustness: The Act’s robustness and quality management requirements mean FL systems will need frequent model validation. The paper’s experiments showed this leads to increased idle energy consumption by clients waiting for validation to complete before the next round can begin.

- Data governance advantages: On the flip side, FL has some inherent advantages in data governance. Its decentralized nature allows access to more representative datasets across siloed clients and can help mitigate bias without the risks of data movement. The lack of direct data access also simplifies lineage tracking.
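To give a feel for where the privacy overhead in the first takeaway comes from, here is a rough sketch of the clip-and-noise step that differentially private FL schemes commonly add to aggregation. This is a generic illustration, not the mechanism used in the paper's experiments: the function names, the `clip_norm` and `noise_multiplier` parameters, and the example values are assumptions, and the sketch makes no formal privacy-budget claim.

```python
import math
import random
from typing import List


def clip_update(update: List[float], clip_norm: float) -> List[float]:
    """Scale an update down so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in update]


def private_average(updates: List[List[float]], clip_norm: float,
                    noise_multiplier: float, rng: random.Random) -> List[float]:
    """Clip every client update, sum, add Gaussian noise, then average."""
    clipped = [clip_update(u, clip_norm) for u in updates]
    dim = len(clipped[0])
    summed = [sum(u[i] for u in clipped) for i in range(dim)]
    # Noise scale is tied to the clipping bound (assumed calibration).
    sigma = noise_multiplier * clip_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / len(updates) for v in noisy]


# Hypothetical updates from two clients.
rng = random.Random(0)
updates = [[3.0, 4.0], [0.3, 0.4]]
aggregate = private_average(updates, clip_norm=1.0, noise_multiplier=0.5, rng=rng)
```

Each client update needs an extra norm computation and rescaling, and the added noise typically means more rounds to reach the same accuracy, which is one plausible way such techniques translate into the computational and communication costs described above.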

Future Directions

To position FL as a go-to approach for AI Act-compliant machine learning, the paper argues the research community will need to re-prioritize. Some key areas for focus include improving data quality management without direct access, optimizing FL energy efficiency to compete with centralized baselines, and creating compliance-focused frameworks that map FL privacy techniques to regulatory requirements.

The Road Ahead

The authors conclude that while challenges remain, FL is uniquely positioned to align with the robust, private, secure, and ethical AI development principles put forth in the EU AI Act. Proactive efforts by the research community now can help shape FL into a preferred solution for this emerging regulatory landscape.

As someone working in the field of machine learning, it’s encouraging to see this kind of proactive analysis on adapting to the coming wave of AI regulation. The FL community has an opportunity to lead the way in developing compliant and socially beneficial AI systems, but it will require focused, collaborative effort to get there. This paper provides a valuable starting roadmap.