Federated learning (FL) has become an important approach for addressing privacy and collaboration challenges in machine learning. However, simulating FL scenarios presents several difficulties, including handling decentralized data, accommodating diverse node types, and implementing customizable workflows. Many existing frameworks primarily focus on horizontal FL, leaving gaps in support for other FL categories and architectures.

To address these limitations, we present FLEX: a FLEXible Federated Learning Framework designed to provide researchers with maximum flexibility in their FL experiments. FLEX offers several key features to overcome the challenges associated with FL simulation:

1. Comprehensive Data Handling

FLEX includes a dedicated data module that allows researchers to model various data distributions across nodes. This module supports:

- Horizontal Federated Learning (HFL)

- Vertical Federated Learning (VFL)

- Federated Transfer Learning (FTL)

By providing tools to create and manage these different data partitioning scenarios, FLEX enables researchers to explore a wide range of FL applications and strategies.
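The difference between horizontal and vertical partitioning can be illustrated with a small NumPy sketch. This is not FLEX's data module API; the function names `horizontal_partition` and `vertical_partition` are hypothetical and chosen only for illustration. The sketch assumes a dense feature matrix whose rows are samples and whose columns are features.

```python
import numpy as np

def horizontal_partition(X, y, n_nodes, seed=0):
    """HFL-style split: each node gets different samples (rows), all features."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(X))  # shuffle sample indices before splitting
    return [(X[part], y[part]) for part in np.array_split(idx, n_nodes)]

def vertical_partition(X, n_nodes):
    """VFL-style split: each node gets different features (columns), all samples."""
    cols = np.array_split(np.arange(X.shape[1]), n_nodes)
    return [X[:, c] for c in cols]

# Toy dataset: 6 samples, 4 features (illustrative values only).
X = np.arange(24, dtype=float).reshape(6, 4)
y = np.array([0, 1, 0, 1, 0, 1])

hfl_nodes = horizontal_partition(X, y, n_nodes=3)  # 3 nodes, 2 samples each
vfl_nodes = vertical_partition(X, n_nodes=2)       # 2 nodes, 2 features each
```

In the horizontal case every node holds the full feature space for a subset of samples, while in the vertical case every node holds a subset of features for the same samples. Federated transfer learning typically covers the case where nodes overlap only partially in both samples and features.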

2. Flexible Node Distribution

The actor module in FLEX allows for the creation of diverse FL architectures. Researchers can design and implement:

- Traditional client-server models

- Peer-to-peer networks

- Custom architectures to suit specific research needs

This flexibility accommodates the heterogeneous nature of FL nodes, allowing for more realistic simulations of real-world FL scenarios.
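As a rough illustration of how the architectures listed above differ, each can be described as a communication graph between nodes. The sketch below is plain Python and does not use FLEX's actor module; the helper names `client_server` and `peer_to_peer`, and the convention that node 0 is the server, are assumptions made for this example.

```python
def client_server(n_clients):
    """Star topology: node 0 is the server, nodes 1..n_clients are clients."""
    edges = {0: set(range(1, n_clients + 1))}  # server talks to every client
    for c in range(1, n_clients + 1):
        edges[c] = {0}                         # each client talks only to the server
    return edges

def peer_to_peer(n_nodes):
    """Fully connected topology: every node talks to every other node."""
    return {i: set(range(n_nodes)) - {i} for i in range(n_nodes)}

star = client_server(3)
mesh = peer_to_peer(3)
```

A custom architecture would correspond to any other edge set, for example a ring or a hierarchy of intermediate aggregators.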

3. Customizable FL Workflows

FLEX’s pool module provides researchers with the tools to design and implement custom FL workflows. It offers multiple levels of abstraction:

- Low-level communication tools for fine-grained control

- High-level decorators for simplified implementation

- Primitives for quick prototyping

This multi-tiered approach allows researchers to balance ease of use with the need for detailed control over their FL experiments.
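To give a sense of what a decorator-based abstraction over a federated round might look like, the following sketch wraps a per-client function so that it runs on every client, then aggregates the results with a sample-weighted average (FedAvg-style). It is a generic illustration under stated assumptions, not FLEX's pool module: the decorator `on_each_client` and the functions `local_step` and `fed_avg` are hypothetical names, and model weights are represented as plain NumPy arrays.

```python
import functools
import numpy as np

def on_each_client(fn):
    """Hypothetical decorator: apply fn to every client's weights, return a list."""
    @functools.wraps(fn)
    def wrapper(client_weights, *args, **kwargs):
        return [fn(w, *args, **kwargs) for w in client_weights]
    return wrapper

@on_each_client
def local_step(weights, grad, lr=0.1):
    """One gradient-descent step on a single client's weights."""
    return weights - lr * grad

def fed_avg(client_weights, n_samples):
    """Average client weights, weighting each client by its sample count."""
    return np.average(np.stack(client_weights), axis=0, weights=n_samples)

# Two clients with toy weights; the same gradient is used for both for brevity.
clients = [np.array([1.0, 2.0]), np.array([3.0, 4.0])]
updated = local_step(clients, grad=np.array([1.0, 1.0]), lr=0.5)
global_weights = fed_avg(updated, n_samples=[1, 3])
```

The decorator hides the loop over clients, which is the kind of convenience a high-level layer provides, while the explicit `fed_avg` call shows the finer control available when the aggregation step is written by hand.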

4. Framework Integration

FLEX is designed to work seamlessly with popular machine learning frameworks, including:

- PyTorch

- TensorFlow

- scikit-learn

This integration facilitates the development and testing of novel FL techniques using familiar tools and libraries.

Companion Libraries

To further enhance FL support across various machine learning domains, FLEX is accompanied by a suite of specialized libraries:

- FLEX-Anomalies: Integrates anomaly detection algorithms for data analysis and purification in FL settings.

- FLEX-Block: Implements blockchain architectures to enable decentralized FL environments, improving security and trust.

- FLEX-Clash: Incorporates advanced adversarial attacks and defense mechanisms to enhance FL security.

- FLEX-NLP: Provides datasets and implementations for natural language processing tasks in FL scenarios.

- FLEX-Trees: Integrates decision tree models, including both single and ensemble approaches, for federated learning applications.

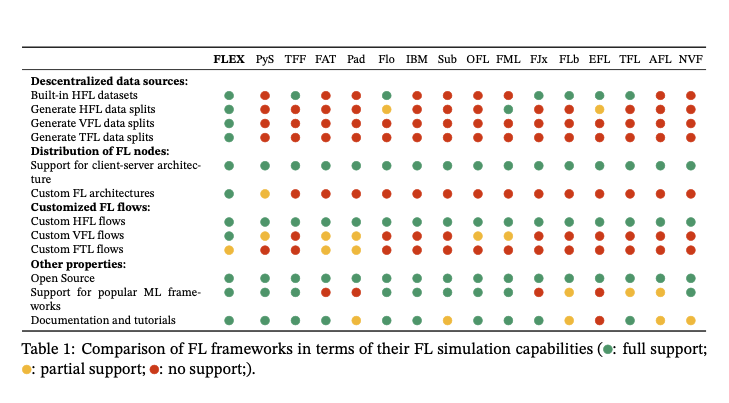

Comparison with Existing Frameworks

FLEX addresses several limitations found in current FL frameworks. The table below compares FLEX with other popular FL frameworks across key features:

As shown in the comparison, FLEX offers comprehensive support for various FL scenarios, including VFL and FTL, which are often underrepresented in other frameworks. This broad support aims to encourage more diverse and innovative FL research.

Availability and Documentation

FLEX is an open-source project available on both GitHub and PyPI. To support users in leveraging its capabilities, FLEX provides:

- Extensive documentation

- Detailed tutorials

- Code examples for common FL scenarios

These resources aim to make FLEX accessible to both experienced FL researchers and newcomers to the field.

Future Directions

The development of FLEX is ongoing, with plans to enhance its capabilities further:

- Improved FL simulation features

- Support for emerging FL workflows

- Advanced data partitioning techniques

A key goal for future versions of FLEX is to bridge the gap between simulated FL experiments and real-world deployments. This advancement will allow researchers to more easily transition their work from experimental setups to practical applications.

Conclusion

FLEX provides a flexible, comprehensive framework that addresses the main difficulties of FL simulation: decentralized data, diverse node types, and customizable workflows. In doing so, it aims to accelerate innovation in federated learning research. As privacy and collaboration continue to be critical concerns in machine learning, tools like FLEX can play an important role in developing effective, secure, and privacy-preserving ML solutions.