Federated learning has become an important method for collaborative model training that preserves data privacy. SecureBoost, a popular federated tree boosting algorithm, uses homomorphic encryption to protect sensitive information during training. However, recent research has identified potential vulnerabilities in SecureBoost that could compromise the privacy of participating parties through label leakage.

To address this challenge, researchers from Beihang University and WeBank have developed the Constrained Multi-Objective SecureBoost (CMOSB) algorithm. CMOSB searches for hyperparameter configurations for SecureBoost that offer the best trade-offs among model performance, training efficiency, and privacy protection.

Key Contributions

The research team has made several significant contributions:

-

CMOSB Problem Formulation: They have formally defined the CMOSB problem and created an algorithm to identify Pareto optimal hyperparameter solutions. These solutions simultaneously minimize three objectives: utility loss, training cost, and privacy leakage.

-

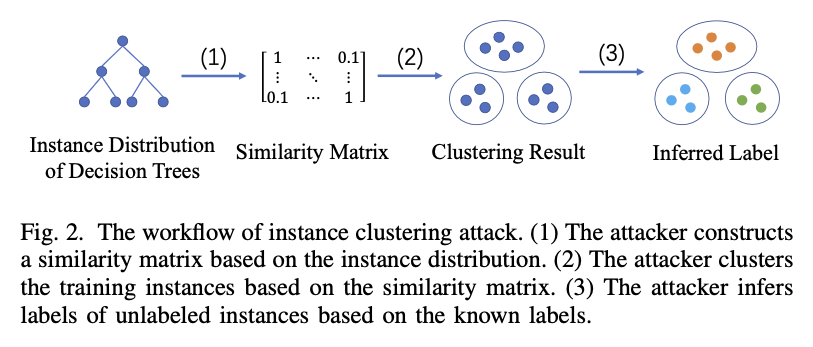

Objective Measurements: The researchers designed specific measurements for each of the three optimization objectives. This includes a new method to assess privacy leakage in SecureBoost called the Instance Clustering Attack (ICA).

-

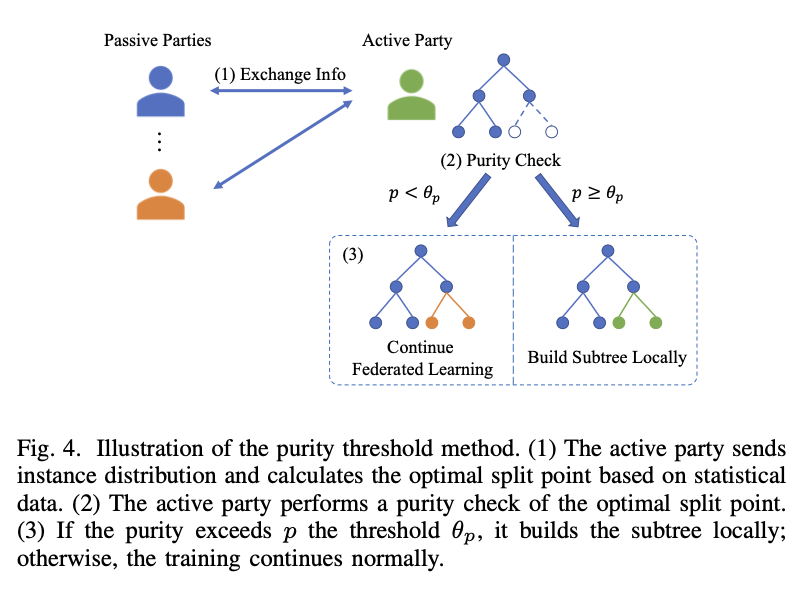

Privacy Protection Measures: To counter the ICA attack, the team proposed two strategies: local tree training and purity thresholding.
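To make the idea of Pareto optimality concrete, here is a minimal sketch of how non-dominated configurations can be filtered from a set of candidates. It is not the CMOSB search algorithm itself. The names `dominates` and `pareto_front` and the objective values are hypothetical, and each tuple is assumed to hold (utility loss, training cost, privacy leakage), all to be minimized.

```python
from typing import List, Tuple

# Each candidate is (utility_loss, training_cost, privacy_leakage); lower is better.
Objectives = Tuple[float, float, float]


def dominates(a: Objectives, b: Objectives) -> bool:
    """True if a is no worse than b on every objective and strictly better on one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(points: List[Objectives]) -> List[Objectives]:
    """Keep only the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical objective values for four hyperparameter configurations.
candidates = [
    (0.10, 30.0, 0.40),
    (0.12, 20.0, 0.35),
    (0.15, 35.0, 0.45),  # dominated by the first candidate
    (0.08, 50.0, 0.60),
]
front = pareto_front(candidates)
```

In this toy example the third candidate is dropped because the first is better on all three objectives. The remaining three each win on at least one objective, so none of them can be discarded without giving something up.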

Instance Clustering Attack (ICA)

The Instance Clustering Attack is a novel approach to assess potential privacy leakage in SecureBoost. This method aims to infer sensitive label information by analyzing the clustering patterns of instances during the training process.

The image above illustrates the workflow of the Instance Clustering Attack. This attack method provides a way to quantify the privacy risks associated with different hyperparameter configurations in SecureBoost.
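The intuition behind a clustering-based attack can be illustrated with a small sketch. It assumes, hypothetically, that an attacker can observe which tree node each instance reaches, and it uses a deliberately simple stand-in for the clustering step: instances with identical node memberships are grouped together. The purity score against the true labels is an illustrative leakage proxy, not the measurement defined by the authors, and all names and data are invented for the example.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Sequence, Tuple


def cluster_by_node_membership(
    node_ids: Sequence[Sequence[int]],
) -> Dict[Tuple[int, ...], List[int]]:
    """Group instance indices that reach the same node in every observed tree.

    node_ids[i][t] is the (hypothetical) node that instance i reaches in tree t.
    """
    clusters: Dict[Tuple[int, ...], List[int]] = defaultdict(list)
    for idx, path in enumerate(node_ids):
        clusters[tuple(path)].append(idx)
    return dict(clusters)


def clustering_purity(
    clusters: Dict[Tuple[int, ...], List[int]], labels: Sequence[int]
) -> float:
    """Fraction of instances whose label matches their cluster's majority label."""
    matched = 0
    for members in clusters.values():
        counts = Counter(labels[i] for i in members)
        matched += counts.most_common(1)[0][1]
    return matched / len(labels)


# Toy data: six instances, two trees, binary labels (used only for evaluation).
node_ids = [[0, 1], [0, 1], [0, 1], [2, 3], [2, 3], [2, 1]]
labels = [1, 1, 0, 0, 0, 1]

clusters = cluster_by_node_membership(node_ids)
leakage_proxy = clustering_purity(clusters, labels)
```

A purity close to 1.0 would suggest that the grouping alone reveals most labels, which is the kind of risk such an attack is meant to quantify.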

Countermeasures Against ICA

To mitigate the risks posed by the Instance Clustering Attack, the researchers proposed two main countermeasures:

- Local Tree Training: This approach involves training decision trees locally on each participant’s data before sharing the aggregated results. By limiting the exposure of raw data, this method can reduce the effectiveness of clustering-based attacks.
- Purity Thresholding: This technique introduces a threshold for the purity of leaf nodes in decision trees. By controlling the granularity of the tree structure, purity thresholding can help prevent overfitting and reduce the information leakage that attackers might exploit.

The figure above demonstrates how purity thresholding works. By setting a minimum purity threshold for leaf nodes, the algorithm can control the depth and specificity of the decision trees, thereby reducing the risk of privacy leakage.
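As a rough illustration of a purity check, the sketch below computes the purity of a node as the share of its most common label and compares it with a threshold. This definition of purity, the function names, and the threshold value are assumptions made for the example. What happens once a node crosses the threshold (for instance, stopping further splits on it) is left to the caller, since the text above does not specify it.

```python
from collections import Counter
from typing import Sequence


def node_purity(labels: Sequence[int]) -> float:
    """Share of the most common label among the instances in a node."""
    if not labels:
        raise ValueError("node has no instances")
    return Counter(labels).most_common(1)[0][1] / len(labels)


def exceeds_purity_threshold(labels: Sequence[int], threshold: float) -> bool:
    """True when the node's purity reaches the given threshold."""
    return node_purity(labels) >= threshold


# Hypothetical label sets for two nodes.
mixed_node = [0, 1, 0, 1, 1]  # purity 3/5
purer_node = [1, 1, 1, 1, 0]  # purity 4/5

mixed_flag = exceeds_purity_threshold(mixed_node, threshold=0.75)
purer_flag = exceeds_purity_threshold(purer_node, threshold=0.75)
```

With a threshold of 0.75, only the second node is flagged. Nodes dominated by a single label are the ones whose membership says the most about the labels inside them.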

Experimental Results

The research team conducted extensive experiments using multiple datasets to evaluate the effectiveness of CMOSB. The results showed that CMOSB consistently outperformed traditional hyperparameter optimization methods such as grid search and Bayesian optimization.

Key findings from the experiments include:

- Superior Trade-offs: The Pareto optimal solutions obtained by CMOSB achieved better trade-offs between utility, efficiency, and privacy compared to state-of-the-art approaches.
- Improved Privacy Protection: The proposed countermeasures (local tree training and purity thresholding) significantly reduced the success rate of privacy attacks while maintaining competitive model performance.
- Efficiency Gains: CMOSB demonstrated the ability to find hyperparameter configurations that reduced training time without sacrificing model accuracy or privacy protection.

Implications for Federated Learning

This research has important implications for the field of federated learning:

- Enhanced Trust: By providing a framework to balance model performance, training cost, and privacy protection, CMOSB enables more trustworthy applications of SecureBoost in real-world scenarios.
- Practical Optimization: The multi-objective approach of CMOSB allows organizations to tailor their federated learning systems to specific requirements, whether prioritizing speed, accuracy, or privacy.
- Improved Risk Assessment: The Instance Clustering Attack method offers a new way to evaluate privacy risks in federated learning systems, helping developers and users make informed decisions about deployment and configuration.
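One way an organization might tailor its choice from a set of Pareto optimal configurations is to impose upper bounds on the objectives it cares most about and then pick the best remaining option. The sketch below assumes a hypothetical list of candidate configurations with made-up objective values. The function name, dictionary keys, and selection rule are illustrative and are not part of CMOSB.

```python
from typing import Dict, List, Optional

Config = Dict[str, float]


def pick_configuration(
    front: List[Config], max_leakage: float, max_cost: float
) -> Optional[Config]:
    """Among candidates within both bounds, return the one with the lowest utility loss."""
    feasible = [
        c
        for c in front
        if c["privacy_leakage"] <= max_leakage and c["training_cost"] <= max_cost
    ]
    if not feasible:
        return None  # no candidate satisfies the requested bounds
    return min(feasible, key=lambda c: c["utility_loss"])


# Hypothetical Pareto optimal candidates (all objectives are minimized).
front = [
    {"utility_loss": 0.10, "training_cost": 30.0, "privacy_leakage": 0.40},
    {"utility_loss": 0.12, "training_cost": 20.0, "privacy_leakage": 0.35},
    {"utility_loss": 0.08, "training_cost": 50.0, "privacy_leakage": 0.60},
]

balanced = pick_configuration(front, max_leakage=0.50, max_cost=40.0)
privacy_first = pick_configuration(front, max_leakage=0.36, max_cost=40.0)
too_strict = pick_configuration(front, max_leakage=0.10, max_cost=10.0)
```

Tightening the leakage bound moves the choice from the first candidate to the second, at the price of a slightly higher utility loss. Bounds that no candidate meets return `None`, which signals that the requirements need to be relaxed.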

Conclusion

The Constrained Multi-Objective SecureBoost (CMOSB) algorithm represents a significant advancement in optimizing federated learning systems. By simultaneously considering model performance, training efficiency, and privacy protection, CMOSB provides a more comprehensive approach to hyperparameter optimization in SecureBoost.

As federated learning continues to gain importance in fields such as finance and healthcare, ensuring the security and efficiency of these systems becomes increasingly critical. The CMOSB algorithm and its associated techniques offer valuable tools for organizations looking to implement robust and privacy-preserving federated learning solutions.

Future research in this area may focus on extending the CMOSB approach to other federated learning algorithms, developing more sophisticated privacy attack models, and exploring additional countermeasures to further enhance the security of collaborative learning systems.